The Role of LLM Bots to Empower Conversational AI Solutions

- August 28th, 2025 / 5 Mins read

- Aarti Nair

Customer conversations are no longer predictable or linear — they’re dynamic, multi-layered, and often stretch across chat, voice, and multiple digital channels. Traditional scripted chatbots, designed around rigid flows and pre-set rules, struggle to keep up with this complexity. This is where AI Agents powered by Large Language Models (LLMs) step in.

Unlike earlier bots, LLM-powered AI Agents bring contextual understanding, adaptability, and the ability to handle nuance. They don’t just “follow scripts” — they listen, interpret intent, and respond with relevance, making interactions smoother for customers and more efficient for businesses.

The market signals the urgency of this shift. The AI Agents market was valued at USD 5.25 billion in 2024 and is projected to grow to USD 52.62 billion by 2030 at a CAGR of 46.3%. That level of growth reflects a widespread recognition: enterprises are moving beyond simple automation and adopting AI Agents as a strategic layer in their customer experience and operational frameworks.

In this blog, we’ll explore how LLM bots are becoming the backbone of conversational AI solutions — empowering AI Agents to move from scripted conversations to intelligent, intent-driven dialogues that scale across industries.

Conversational AI Solutions Overview

A Conversational Artificial Intelligence (AI) system understands human language using Natural Language Processing (NLP) and Machine Learning (ML) algorithms. Such a system offers round-the-clock assistance with instant responses and can handle large volumes of inquiries at once. Traditional conversational AI systems, however, may struggle with the nuances of human language, which leads to inaccurate responses and poor performance. They also tend to sound robotic and lack a human feel.

Suggested Reading: Conversational AI 101: Explained with Use Cases and Examples

What Are LLM Bots and How Do They Work?

At their core, LLM bots are conversational systems powered by Large Language Models (LLMs) such as GPT-style architectures. Unlike traditional chatbots, which follow predefined scripts or decision trees, LLM bots are trained on vast amounts of text data and can generate human-like responses by understanding context, intent, and nuance.

Think about a traditional chatbot that relies on keyword detection: if a customer types “I lost my card,” the bot might only match the word “card” and respond with a generic product description. In contrast, an LLM bot interprets the whole phrase, recognises it as a lost card scenario, and responds with relevant next steps like blocking the card or initiating a replacement request.
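To make the keyword problem concrete, here is a minimal sketch of a rules-based bot. The keyword table and replies are invented for illustration and do not come from any real product.

```python
# Hypothetical keyword table for a rules-based bot (illustrative only).
KEYWORD_REPLIES = {
    "card": "Our cards come with cashback and travel rewards.",
    "loan": "We offer personal and home loans.",
}

def keyword_bot(message: str) -> str:
    """Reply based on the first keyword found, ignoring the rest of the message."""
    text = message.lower()
    for keyword, reply in KEYWORD_REPLIES.items():
        if keyword in text:
            return reply
    return "Sorry, I did not understand that."

# The word "lost" never influences the answer, so the customer gets a sales pitch.
print(keyword_bot("I lost my card"))
```

The bot matches "card" and returns the product description, which is exactly the failure described above. An LLM bot would read the whole phrase before deciding what to do.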

This deeper understanding is what makes LLM bots the intelligence layer behind AI Agents. They enable AI Agents to go beyond static FAQ-style interactions and engage in fluid conversations that adapt to user needs.

Here’s how they typically work:

- Input Processing: Customer queries are transcribed (for voice) or captured (for chat).

- Contextual Understanding: The LLM analyses the text, identifying intent, entities, and sentiment.

- Dynamic Response Generation: Instead of a scripted answer, the model generates a context-aware, natural response.

- Agentic Behaviour: The AI Agent can then take action: updating records, verifying identities, or even escalating to a human when required.
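The four steps above can be sketched as a small pipeline. This is a simplified illustration, not a reference implementation: `stub_llm` stands in for a real model call, and the intent name, the `block_card` action, and the reply wording are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Understanding:
    intent: str
    entities: dict = field(default_factory=dict)
    sentiment: str = "neutral"

def stub_llm(text: str) -> Understanding:
    """Stand-in for a real LLM call; it only recognises the demo phrase."""
    lowered = text.lower()
    if "lost" in lowered and "card" in lowered:
        return Understanding("report_lost_card", {"product": "card"}, "worried")
    return Understanding("unknown")

def block_card(entities: dict) -> str:
    """Hypothetical backend action; a real one would call the bank's systems."""
    return f"I have blocked your {entities['product']}."

ACTIONS: dict[str, Callable[[dict], str]] = {"report_lost_card": block_card}

def handle(raw_input: str, understand: Callable[[str], Understanding] = stub_llm) -> str:
    text = raw_input.strip()                     # input processing
    reading = understand(text)                   # contextual understanding
    action = ACTIONS.get(reading.intent)
    if action is None:                           # nothing the agent can do itself
        return "Let me connect you to a human agent."
    outcome = action(reading.entities)           # agentic behaviour
    return f"{outcome} Is there anything else I can help with?"  # response generation

print(handle("I lost my card"))
```

Passing the model in as the `understand` argument keeps the pipeline testable without a live LLM.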

By combining this with enterprise data and integrations, LLM bots transform from being reactive responders into AI Agents capable of reasoning, problem-solving, and executing tasks.

In short, while chatbots can answer questions, LLM bots empower AI Agents to manage conversations — as if they were teammates rather than tools.

AI Agents vs. Traditional Chatbots: The Big Shift

Traditional Chatbots vs. AI Agents

| Aspect | Traditional Chatbots | AI Agents (LLM-Powered) |
|---|---|---|
| Core Approach | Rules-based, decision trees | Context-aware, powered by LLMs |
| Conversation Flow | Scripted, linear | Dynamic, adaptive, multi-turn |
| Understanding | Relies on keywords | Interprets intent, nuance, and sentiment |
| Handling Complexity | Struggles with layered or combined queries | Manages multiple intents in one conversation |
| Language Support | Limited, often one language | Multilingual, dialect-sensitive |
| Learning Ability | Manual updates needed | Continuously improves with training data |
| User Experience | Feels robotic, restrictive | Feels natural, human-like, and fluid |
| Business Role | Tactical tool for FAQs | Strategic asset for CX and automation |

For years, chatbots were the face of automation. They offered businesses a way to answer FAQs, handle simple queries, and reduce the load on human teams. But their strength was also their biggest limitation: they were rules-based. Each possible question had to be anticipated in advance, with rigid decision trees dictating every response.

This rigidity meant that chatbots often stumbled when customers asked questions outside their scripted flow. For instance, if a user typed “I need to change my flight ticket and add extra baggage,” a traditional chatbot might fail because it was only trained to recognise “change ticket” or “baggage” separately — not as a combined intent.

Enter AI Agents powered by LLM bots. Unlike static chatbots, AI Agents are adaptive, context-aware, and capable of handling layered intent. In the same scenario, an AI Agent would parse the request, identify both intents (ticket change + extra baggage), and guide the customer seamlessly through both actions in one conversation.
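One way to picture layered intent handling: assume the model returns every intent it found as structured JSON, and the agent works through them in order. The JSON shape, intent names, and handlers below are hypothetical.

```python
import json

# Assumed JSON shape for a model's reply; real models and prompts will differ.
MODEL_OUTPUT = '{"intents": [{"name": "change_ticket"}, {"name": "add_baggage", "bags": 1}]}'

# Hypothetical handlers, one per supported intent.
HANDLERS = {
    "change_ticket": lambda args: "Which date would you like to fly instead?",
    "add_baggage": lambda args: f"Adding {args.get('bags', 1)} extra bag(s).",
}

def plan_steps(model_output: str) -> list[str]:
    """Turn every detected intent into a step, so no part of the request is dropped."""
    intents = json.loads(model_output)["intents"]
    steps = []
    for intent in intents:
        handler = HANDLERS.get(intent["name"])
        if handler is None:
            steps.append(f"Escalate: no handler for {intent['name']}")
        else:
            steps.append(handler(intent))
    return steps

for step in plan_steps(MODEL_OUTPUT):
    print(step)
```

Both the ticket change and the baggage request produce a step, which is what a bot trained on each intent separately tends to miss.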

This is why enterprises are moving from “chatbots” to “agents.” The difference isn’t just about smarter answers; it’s about delivering human-like conversation flows that reflect how people naturally communicate. AI Agents don’t just react — they understand, anticipate, and act, making them strategic assets in customer experience rather than tactical tools.

The shift is clear: businesses no longer want bots that answer questions; they want AI Agents that solve problems.

Role of LLM Bots in Conversational AI

Using LLM bots in conversational AI systems improves their ability to understand human language and its nuances.

- Using sophisticated NLP and ML algorithms, LLM bots can analyse human language closely and deliver precise responses.

- These algorithms also give LLM bots an understanding of context, something conventional conversational AI systems often find difficult.

The strength of LLM bots lies in their ability to power AI Agents that don’t just answer questions but hold meaningful, human-like conversations. They make conversational AI more intelligent, more adaptable, and ultimately, more useful for both customers and businesses. Here’s how:

1. Contextual Understanding

One of the biggest limitations of traditional chatbots is their short memory. They often treat each question in isolation, which makes conversations clunky. LLM bots solve this by remembering context and maintaining continuity.

Imagine a customer interacting with an airline’s AI Agent. They first ask: “Can I change my flight to tomorrow?” and then follow up with “And add a meal preference too?”

- A traditional chatbot may fail because it doesn’t link the two queries.

- An LLM-powered AI Agent understands that both requests relate to the same booking, recalls the earlier context, and responds: “Sure, I’ll change your flight to tomorrow and add your meal preference. Would you like vegetarian or non-vegetarian?”

This contextual awareness makes conversations smoother and avoids forcing customers to repeat themselves.
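A rough sketch of how that continuity can be kept: store the running history and the facts gathered so far, and send both with every model call. The role/content message format is a common convention but is assumed here, and the booking reference is made up.

```python
from dataclasses import dataclass, field

@dataclass
class Conversation:
    """Keeps the running message history plus facts gathered so far."""
    history: list[dict] = field(default_factory=list)
    slots: dict = field(default_factory=dict)

    def add_user_turn(self, text: str, **new_slots) -> None:
        self.history.append({"role": "user", "content": text})
        self.slots.update(new_slots)  # later turns add to earlier facts

    def prompt_messages(self) -> list[dict]:
        """Messages for the next model call: known facts first, then the full history."""
        facts = ", ".join(f"{k}={v}" for k, v in self.slots.items())
        return [{"role": "system", "content": f"Known facts: {facts}"}] + self.history

chat = Conversation()
# "ABC123" is a made-up booking reference for the example.
chat.add_user_turn("Can I change my flight to tomorrow?", booking="ABC123", new_date="tomorrow")
chat.add_user_turn("And add a meal preference too?", meal="unspecified")
```

Because the second turn is sent together with the first, the model can tell that the meal preference belongs to the same booking.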

2. Multilingual Support

In regions like the Middle East and North Africa (MENA), conversations don’t stick to one language or dialect. Customers may switch between Arabic dialects and English in a single sentence. LLM bots, trained on diverse datasets, can recognise and respond naturally across these variations.

Let’s say a customer in Dubai types on WhatsApp: “مرحبا، I want to check my account balance.”

- A rules-based chatbot may struggle to understand this mix of Arabic and English.

- An LLM-powered AI Agent interprets the intent correctly, replying: “Welcome! Your balance is AED 12,400. Would you like me to send you a mini-statement?”

This ability to handle code-switching and dialects makes AI Agents far more inclusive and effective for global businesses.
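Code-switching is easy to detect even before a model sees the message. The sketch below uses Python's standard `unicodedata` module to list the scripts present in a message. It only flags mixed scripts; it does not identify dialects or interpret intent, which is the LLM's job.

```python
import unicodedata

def scripts_in(text: str) -> set[str]:
    """Return the scripts (ARABIC, LATIN, ...) of the letters in a message."""
    found = set()
    for ch in text:
        if ch.isalpha():
            # Unicode character names start with the script, e.g. "ARABIC LETTER MEEM".
            found.add(unicodedata.name(ch, "UNKNOWN").split()[0])
    return found

message = "مرحبا، I want to check my account balance."
mixed = len(scripts_in(message)) > 1
print(sorted(scripts_in(message)), mixed)
```

A flag like `mixed` could be used to route the message to a multilingual model or to log how often customers switch languages.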

3. Voice + Chat Integration

Customer interactions today aren’t limited to text. Many prefer voice calls for speed and convenience. LLM bots enable voice AI agents to converse naturally, detect interruptions, and adapt mid-conversation — just like a human agent would.

A telecom customer calls a support line and says: “My internet is not working… oh wait, it just came back, but it’s very slow.”

- A simple IVR would fail because it expects a fixed input.

- A voice AI Agent powered by an LLM adjusts instantly: “I understand. Your connection is active now but running slow. Would you like me to run a quick speed test for you?”

By handling overlapping speech and incomplete sentences, LLM-powered voice AI Agents create a truly human-like experience.

4. Personalisation

Customers don’t just want correct answers — they want interactions that feel tailored to them. LLM bots empower AI Agents to adapt tone, style, and even recommendations based on user history or preferences.

A returning e-commerce customer asks: “Show me shoes under ₹2,000.”

- A static chatbot might simply list generic results.

- An AI Agent with LLM capabilities recalls past purchases and replies: “Last time you picked Adidas sneakers. Here are some similar options under ₹2,000 — do you want me to filter by running or casual?”

This personalised experience makes customers feel recognised and valued, increasing satisfaction and loyalty.

5. Scalability

Enterprises often face thousands, sometimes millions, of customer interactions daily. Scaling human teams alone is impractical. LLM bots give AI Agents the ability to handle high-volume conversations while still maintaining accuracy and quality.

During a bank’s loan campaign, thousands of customers inquire at the same time: “Am I eligible for a personal loan?”

- Without automation, this would overwhelm agents.

- An AI Agent powered by an LLM can simultaneously answer every query, pull personalised eligibility information, and only escalate complex cases to human staff.

This ensures speed, consistency, and reliability at scale — something human teams alone can’t achieve.

In all these ways, LLM bots transform conversational AI from being a static FAQ tool into a dynamic AI Agent ecosystem that listens, understands, and acts with intelligence.

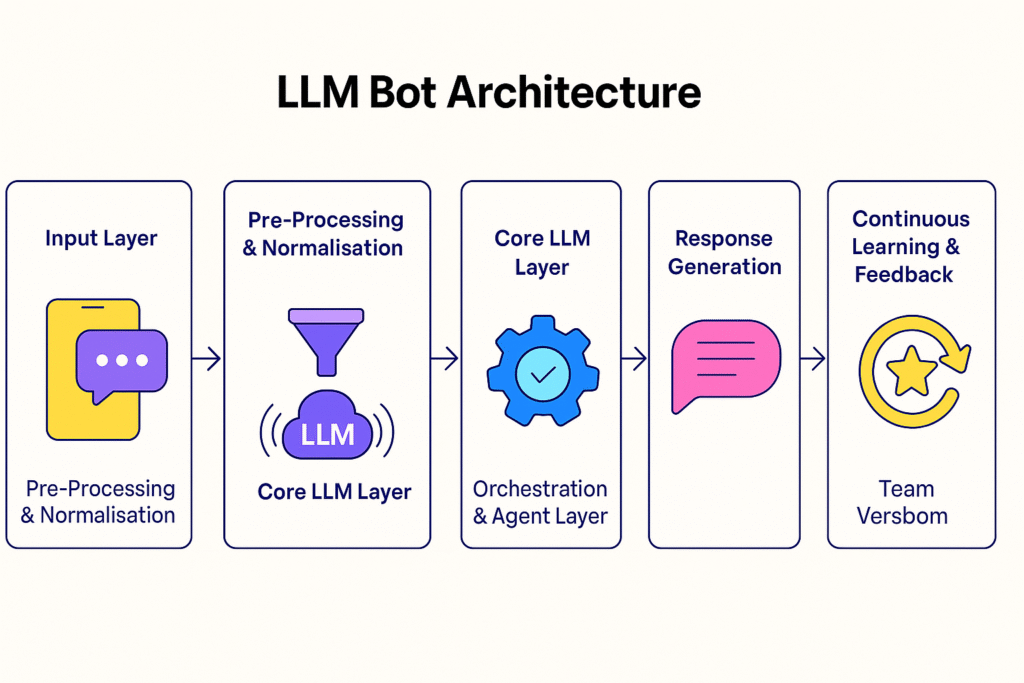

LLM Bot Architecture

Traditional systems also rely on a Natural Language Processing (NLP) engine and a Machine Learning (ML) model, but LLMs have changed how these are used. The NLP engine processes and analyses human language input, while the ML model learns from that input and improves the bot’s performance over time.

An LLM bot adds a few components to those found in a traditional conversation management system, which manages the bot’s dialogue with users and determines how to respond to their input.

Behind every smooth AI Agent conversation is a layered architecture that combines intelligence, adaptability, and enterprise integration. An LLM bot architecture is not just about plugging in a model — it’s about orchestrating multiple components that work together to deliver natural, context-aware, and secure interactions.

Here’s how the architecture typically looks:

1. Input Layer – Capturing the User’s Request

This is where the customer query enters the system — either through chat (web, WhatsApp, in-app) or voice (calls). For voice, Automatic Speech Recognition (ASR) converts spoken words into text.

A customer says on a call: “I want to increase my credit limit.” ASR transcribes it into text before passing it to the next stage.

2. Pre-Processing & Normalisation

Human speech and text are messy — full of filler words, spelling variations, or background noise. The system cleans and standardises input so the LLM can process it accurately.

The spoken phrase “uh… can I maybe, you know, raise my limit?” is normalised into “Can I raise my credit limit?” before analysis.
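A minimal sketch of this clean-up step, using only regular expressions. The filler list is illustrative, and the sketch does not resolve “limit” to “credit limit”; that would need conversation context, which is left to the later layers.

```python
import re

# Illustrative filler list; a production system would tune this per language.
FILLERS = r"\b(?:uh|um|you know|maybe)\b"

def normalise(utterance: str) -> str:
    """Strip filler words, then tidy punctuation, spacing, and capitalisation."""
    text = re.sub(FILLERS, "", utterance, flags=re.IGNORECASE)
    text = re.sub(r"[…,]|\.{2,}", " ", text)    # drop ellipses and stray commas
    text = re.sub(r"\s+", " ", text).strip()     # collapse repeated whitespace
    text = re.sub(r"\s+([?.!])", r"\1", text)    # no space before end punctuation
    return text[:1].upper() + text[1:]

print(normalise("uh… can I maybe, you know, raise my limit?"))
# prints: Can I raise my limit?
```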

3. Core LLM Layer – Understanding & Reasoning

The Large Language Model is the brain of the bot. It interprets intent, extracts entities (like names, account numbers, amounts), and understands sentiment or urgency. This is where the bot moves beyond keyword matching to true context-aware reasoning.

The LLM detects the intent (credit limit increase), sentiment (hesitant but curious), and entity (credit limit).

4. Orchestration & Agent Layer

This is where the AI Agent logic comes in. The LLM doesn’t just generate text; it interacts with APIs, databases, and workflows to perform real actions. Orchestration rules decide whether to:

- Provide an instant answer.

- Fetch data from backend systems.

- Trigger a transaction (like a refund).

- Escalate to a human agent.

The AI Agent checks customer eligibility via the bank’s backend system and responds accordingly.
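The routing decision can be sketched as a small function that maps a classified request to one of the four outcomes listed above. The intent names, the confidence threshold of 0.5, and the `frustrated` flag are assumptions for illustration.

```python
from enum import Enum

class Route(Enum):
    ANSWER = "provide an instant answer"
    FETCH = "fetch data from backend systems"
    TRANSACT = "trigger a transaction"
    ESCALATE = "escalate to a human agent"

# Hypothetical intent names, chosen only for illustration.
NEEDS_BACKEND = {"check_eligibility", "account_balance"}
NEEDS_TRANSACTION = {"refund", "credit_limit_update"}

def choose_route(intent: str, confidence: float, frustrated: bool = False) -> Route:
    """Pick one of the four orchestration outcomes for a classified request."""
    if frustrated or confidence < 0.5:
        return Route.ESCALATE      # low certainty or an upset customer: hand over
    if intent in NEEDS_TRANSACTION:
        return Route.TRANSACT
    if intent in NEEDS_BACKEND:
        return Route.FETCH
    return Route.ANSWER

print(choose_route("check_eligibility", confidence=0.9).value)
```

Checking escalation first means a risky transaction is never triggered on a low-confidence reading.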

5. Response Generation

Once the action is complete, the response is crafted in a natural, brand-aligned tone. For voice channels, Text-to-Speech (TTS) converts it back into speech.

The AI Agent replies: “You’re eligible for a credit limit increase of ₹50,000. Shall I proceed with the update?” in a professional yet friendly voice.

6. Continuous Learning & Feedback

Every interaction feeds back into the system. AI Agents learn from past conversations, agent interventions, and user ratings to improve performance over time.

If multiple users say “top up my card” instead of “increase my limit,” the system learns to map that phrase to the same intent.
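One lightweight way to picture this feedback loop is an alias table that promotes a phrase once enough confirmed conversations agree on its intent. This is a simple stand-in, not model fine-tuning, and the `min_votes` threshold and intent name are assumptions.

```python
from collections import Counter, defaultdict
from typing import Optional

class PhraseLearner:
    """Promote a phrase to an intent alias once enough confirmations agree."""

    def __init__(self, min_votes: int = 3):
        self.min_votes = min_votes
        self.votes = defaultdict(Counter)   # phrase -> Counter of confirmed intents
        self.aliases = {}                   # phrase -> learned intent

    def record(self, phrase: str, confirmed_intent: str) -> None:
        key = phrase.lower().strip()
        self.votes[key][confirmed_intent] += 1
        intent, count = self.votes[key].most_common(1)[0]
        if count >= self.min_votes:
            self.aliases[key] = intent

    def lookup(self, phrase: str) -> Optional[str]:
        return self.aliases.get(phrase.lower().strip())

learner = PhraseLearner()
for _ in range(3):
    learner.record("Top up my card", "credit_limit_increase")
print(learner.lookup("top up my card"))
```

Requiring several agreeing confirmations keeps a single mislabelled conversation from changing the bot's behaviour.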

A well-designed LLM bot architecture ensures that AI Agents are not just smart but also scalable, secure, and enterprise-ready. It combines the raw power of LLMs with governance, compliance, and integrations businesses rely on. Without this layered design, enterprises risk running into problems with latency, accuracy, or data security.

Use Cases of LLM Bots in Conversational AI Solutions

LLM bots are used across industries, including healthcare, banking, and retail.

- In healthcare, LLM bots act as virtual assistants that schedule appointments, answer basic queries, and provide personalised care to patients.

- In banking, LLM bots process loan applications, answer most inquiries about account issues, and offer financial advice.

- In retail, conversational AI solutions automate customer service, making it quick and easy for customers to navigate online stores, place orders, make returns, and handle other requests.

Key Benefits of LLM-Powered AI Agents for Businesses

Adopting LLM-powered AI Agents is no longer an experimental step — it’s becoming a competitive necessity. Businesses that embed these agents into their customer experience strategy are already seeing measurable gains in efficiency, satisfaction, and long-term loyalty. A recent PwC survey highlights this urgency: 73% of respondents believe how they use AI Agents will give them a significant competitive advantage in the next 12 months, and 75% say they feel confident in their company’s AI Agent strategy.

Here’s how these benefits play out in practice:

1. Faster Response Times → Improved CSAT and NPS

LLM-powered AI Agents handle queries instantly, cutting down wait times that frustrate customers. Instead of holding in a queue or navigating multiple menus, customers get relevant answers in seconds.

Suppose a telecom customer wants to check their data balance. A traditional support line might involve IVR menus and long hold times. An AI Agent resolves it instantly with “You have 3.2GB remaining. Would you like to top up now?”, which helps lift CSAT and Net Promoter Scores.

2. Reduced Operational Costs

By automating high-volume, repetitive queries, AI Agents free human agents to focus on complex, revenue-impacting tasks. This reduces staffing pressure and lowers the overall cost per interaction without compromising service quality.

For instance, suppose 70% of an e-commerce company’s incoming chats are about order status. AI Agents can automatically track and share updates, allowing human agents to concentrate on upselling, handling complaints, or resolving edge cases.

3. Seamless Human Handover for Complex Cases

LLM bots give AI Agents the intelligence to recognise when a query is too complex, emotional, or compliance-heavy to handle alone. They then transfer the interaction to a human agent — while retaining context — so customers don’t have to repeat themselves.

Let’s say a bank customer disputes an unauthorised transaction. The AI Agent collects details and detects frustration in the tone. Instead of looping, it escalates with a message: “I’ll connect you to a support specialist who already has your case details.” A smooth handover strengthens customer trust.

4. Industry-Wide Use Cases

LLM-powered AI Agents aren’t limited to one sector; they’re reshaping experiences across industries:

- BFSI: Automating KYC, loan eligibility checks, and fraud alerts.

- Healthcare: Assisting patients with appointment scheduling, prescription reminders, and report access.

- E-commerce & Retail: Handling returns, refunds, product recommendations.

- Telecom: Managing billing queries, outage notifications, and proactive upgrades.

In each case, AI Agents reduce workload, improve customer satisfaction, and open new opportunities for revenue.

LLM-powered AI Agents are not just about efficiency — they’re about creating business resilience and competitive edge. With organisations increasingly confident in their AI Agent strategies, the question is no longer if but how fast you can scale them.

Challenges of LLM Bots in Conversational AI Solutions

Although LLM bots have revolutionised conversational AI, a number of challenges remain.

- Lack of emotional intelligence

- Persistent language barriers

- Limited domain knowledge

These challenges also point to opportunities for future research and development in conversational AI solutions and LLM bot models.

To put everything in context, what is the actual significance of LLM bots in conversational AI solutions? Here is a summary.

Large Language Models in conversational AI solutions help to:

- Deliver a better level of customer service

- Engage users with a human-like conversation flow

- Automate language learning and practice

- Boost efficiency in language translation

- Improve accessibility for non-native speakers

- Ease communication in international collaborations

Considerations in Deploying LLM Bots

While the potential of LLM-powered AI Agents is undeniable, successful deployment requires careful planning. Without the right guardrails, businesses can encounter challenges that impact customer experience and trust. Here are key considerations every organisation should weigh:

1. Data Privacy and Compliance

LLM bots often process sensitive customer information — from financial details to health records. Ensuring compliance with regulations like GDPR, SOC 2, HIPAA, or CITC is non-negotiable. Enterprises must control how data is stored, anonymised, and shared with AI systems.

A healthcare provider using an AI Agent to deliver lab results must verify that no personal data is exposed outside authorised systems. Otherwise, even a minor slip could trigger legal action and erode patient trust.

2. Latency in Voice Interactions

In chat, a small delay might go unnoticed, but in voice AI agents, even 500 milliseconds can feel like an awkward pause. High latency disrupts conversational flow, making customers feel like they’re speaking to a machine rather than a responsive agent.

Consider a customer calling their bank’s AI Agent to check loan eligibility. A 2–3 second delay between question and response causes interruptions, forcing the customer to repeat themselves. Smooth, real-time responses are essential for adoption.
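A practical first step is to measure where the time goes. The sketch below times each stage of a voice pipeline and reports the slowest stages when the total exceeds a budget. The 500 ms budget echoes the figure above, and the sample timings are made up, not measurements.

```python
import time

BUDGET_MS = 500  # target taken from the text above; tune for your own channel

def timed(stage, *args):
    """Run one pipeline stage and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = stage(*args)
    return result, (time.perf_counter() - start) * 1000

def over_budget(stage_ms: dict[str, float], budget_ms: float = BUDGET_MS) -> list[str]:
    """Return stages sorted slowest-first if the total exceeds the budget, else []."""
    if sum(stage_ms.values()) <= budget_ms:
        return []
    return sorted(stage_ms, key=stage_ms.get, reverse=True)

# Made-up timings for illustration, not measurements.
sample = {"asr": 120.0, "llm": 900.0, "tts": 150.0}
print(over_budget(sample))
```

In this invented sample the model call dominates, so that is where a lighter model or caching would be tried first.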

3. Bias and Accuracy in Multilingual Support

LLMs trained on limited or skewed datasets risk producing biased or inaccurate results — particularly in dialects or regional languages. Without careful dataset curation and continuous fine-tuning, AI Agents may misunderstand cultural nuances.

In the Middle East, a user typing in Gulf Arabic might get incorrect responses if the bot was primarily trained on Modern Standard Arabic, leading to poor customer experience.

4. Cost of Training and Maintenance

While pre-trained LLMs are powerful, adapting them to industry-specific use cases requires fine-tuning, integrations, and ongoing monitoring. These efforts add cost and complexity. Businesses must weigh ROI carefully to avoid overspending without measurable gains.

A retail chain may invest heavily in customising an AI Agent for product recommendations but, without proper monitoring, it may struggle with accuracy, increasing returns instead of boosting sales.

5. Human Oversight and Handover

Even the best LLM bots can’t resolve every query. Without clear human handover protocols, customers risk being stuck in frustrating loops. Designing escalation pathways ensures that AI Agents augment humans rather than isolate customers.

An insurance AI Agent can collect details for a claim but should seamlessly route complex disputes to a human specialist — complete with context — instead of asking the customer to repeat themselves.
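A handover with context can be as simple as a structured packet passed to the human agent's desk. The field names and sample values below are hypothetical; a real deployment would match whatever the contact-centre system expects.

```python
from dataclasses import dataclass, asdict

@dataclass
class Handover:
    customer_id: str
    intent: str
    collected: dict        # details the AI Agent has already gathered
    transcript: list       # most recent turns, so the specialist can skim them
    reason: str            # why the AI Agent escalated

def build_handover(customer_id, intent, collected, transcript, reason, last_n=5):
    """Bundle what the agent already knows so the customer need not repeat it."""
    packet = Handover(customer_id, intent, dict(collected), list(transcript[-last_n:]), reason)
    return asdict(packet)

packet = build_handover(
    "C-001",                       # made-up identifiers for the example
    "claim_dispute",
    {"policy": "P-42"},
    ["Hi", "I want to dispute my claim", "It was rejected unfairly"],
    "complex dispute",
    last_n=2,
)
```

Trimming the transcript to the last few turns keeps the packet short while preserving the collected details in full.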

In short, deploying LLM bots isn’t just about plugging in a model. It requires thoughtful design, compliance alignment, and ongoing iteration to ensure AI Agents deliver trustworthy, fast, and human-like experiences at scale.

Best Practices for Deploying LLM Bots Successfully

To overcome these considerations and make AI Agents effective, enterprises should follow a structured approach:

- Prioritise Compliance from Day One: Build data protection and regulatory checks (GDPR, SOC 2, HIPAA, CITC) into your AI workflows.

- Optimise for Low Latency: Especially for voice AI agents, use lightweight models, caching, and efficient pipelines to keep responses under 500 ms.

- Invest in Multilingual Training: Fine-tune on diverse datasets, including dialects, slang, and region-specific phrases, to avoid bias and improve accuracy.

- Measure ROI Continuously: Track KPIs like CSAT, FCR (First Contact Resolution), and cost per interaction to ensure business value outweighs training and maintenance costs.

- Design Human Handover Paths: Always include clear escalation routes with context handover to human agents for complex cases.

- Iterate with Feedback Loops: Use customer interactions, agent notes, and analytics to refine the AI Agent continuously.
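The KPIs named in the list above are simple to compute once interactions are logged. The sketch below assumes a common convention: CSAT as the share of ratings of 4 or 5 on a 5-point scale, and FCR as the share of issues resolved in a single contact. Your own definitions may differ, and the sample data is invented.

```python
from dataclasses import dataclass

@dataclass
class Interaction:
    rating: int            # 1-5 survey score
    contacts_needed: int   # how many contacts it took to resolve the issue
    cost: float            # cost attributed to this interaction

def kpis(interactions: list[Interaction]) -> dict[str, float]:
    """Compute CSAT %, FCR %, and average cost per interaction."""
    n = len(interactions)
    if n == 0:
        raise ValueError("need at least one interaction")
    return {
        "csat_pct": 100 * sum(i.rating >= 4 for i in interactions) / n,
        "fcr_pct": 100 * sum(i.contacts_needed == 1 for i in interactions) / n,
        "cost_per_interaction": sum(i.cost for i in interactions) / n,
    }

# Invented sample data for illustration.
sample_log = [
    Interaction(5, 1, 0.25),
    Interaction(4, 1, 0.25),
    Interaction(2, 3, 1.5),
    Interaction(5, 1, 0.0),
]
print(kpis(sample_log))
```

Tracking these three numbers before and after a rollout gives a first read on whether the AI Agent is paying for itself.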

By following these practices, businesses can ensure that their LLM-powered AI Agents don’t just automate conversations — they deliver secure, scalable, and human-like interactions that build long-term trust.

The Future of Conversational AI with LLM Bots

The rise of LLM-powered AI Agents has already reshaped customer support and automation, but this is only the beginning. In the coming years, these agents will move beyond reactive responses to become proactive, omnichannel, and collaborative partners in customer journeys.

One of the clearest shifts will be the rise of predictive AI Agents. Instead of waiting for a query, these agents will anticipate needs based on customer behaviour and patterns. A telecom provider, for instance, could have its AI Agent notify a customer that they are close to exceeding their data plan and offer an upgrade before service slows. This transforms the interaction from a transaction into a moment of genuine support.

Another important development is omnichannel orchestration. Customers today don’t stick to one platform — they might start a chat on WhatsApp, move to a voice call, and expect continuity. LLM bots make it possible for AI Agents to carry context seamlessly across these channels. A refund request that starts in chat can continue on a call without forcing the customer to repeat details, delivering a truly connected experience.

The relationship between humans and AI will also evolve into collaboration rather than replacement. AI Agents will act as copilots, giving live suggestions, pulling knowledge snippets, or even summarising conversations for agents in real time. In financial services, for example, a human agent discussing a client’s investment portfolio might receive instant compliance guidance from the AI Agent, ensuring faster and more accurate resolutions.

We can also expect industry-specific AI Agents to emerge. Instead of generic bots, enterprises will use domain-trained models tailored to healthcare, BFSI, retail, or telecom. A healthcare provider’s AI Agent, trained on medical terminology and compliance standards, could verify patient identities, share lab results, and detect urgency in patient queries — all with accuracy that a general-purpose model cannot match.

Finally, the conversation around AI will increasingly focus on ethics and trust. As AI Agents take on more critical roles, customers will want transparency in how decisions are made. Imagine an e-commerce AI Agent recommending a product and adding, “This suggestion is based on your past purchases and recent searches.” That kind of explainability builds confidence and strengthens loyalty.

The future of conversational AI lies not just in smarter conversations but in AI Agents that are proactive, seamless across channels, collaborative with humans, tailored to industries, and guided by responsible AI principles. Enterprises that embrace this future will not only improve efficiency but also differentiate themselves in how they build trust with customers.

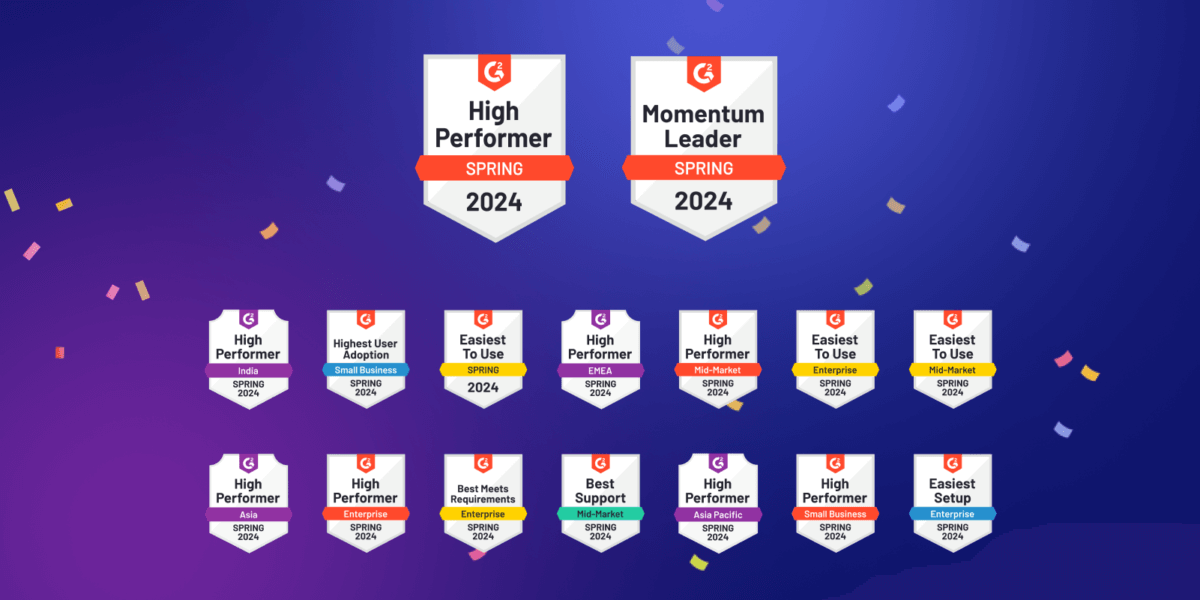

Choose Verloop.io’s Solutions for Your Business Today

LLM bots are making waves in conversational AI solutions.

Building on the capabilities and algorithms of traditional systems, they understand human language at a much deeper level, ensuring that every customer is heard and acknowledged. LLM bots use advanced NLP and ML algorithms to improve the accuracy and relevance of responses. Lower operational costs and greater efficiency lead to better customer service.

As we move forward, it’s evident that LLM Bots will continue to evolve and play an increasingly pivotal role in revolutionizing communication across industries. Organisations looking to stay ahead of the curve should consider partnering with experts in the field, like Verloop.io, to unlock the full potential of LLM Bots and harness the benefits they bring.

So why wait?

Schedule a demo with Verloop.io today and embark on a journey to enhance your customer experiences and drive efficiency in your business operations. The future of Conversational AI solutions is here, and it’s powered by LLM. Don’t miss out on the opportunity to lead the way in your industry.

FAQs on LLM Bots and AI Agents

Q1. What is the LLM model for a chatbot?

The LLM behind a chatbot is a large language model, such as a GPT-style model, used to generate conversational replies. It can understand and respond to a wide variety of text inputs.

Q2. How does an LLM AI work?

An LLM AI chatbot uses a large language model to process natural language input and predict the next word based on the text it has seen so far. Conversational AI solutions rely on this mechanism to communicate with users.

Q3. What are LLM bots in conversational AI?

LLM bots are conversational systems powered by Large Language Models (LLMs) that can understand context, interpret intent, and generate human-like responses. Unlike rule-based chatbots, they enable AI Agents to handle complex, multi-turn conversations across chat and voice channels.

Q4. How do AI Agents differ from traditional chatbots?

Traditional chatbots follow scripted flows and rely heavily on keywords. AI Agents, powered by LLM bots, adapt to real-time inputs, manage layered intents, and provide natural, context-aware conversations. This makes them capable of solving problems rather than just answering pre-set questions.

Q5. Can LLM bots power both chat and voice AI Agents?

Yes. LLM bots are channel-agnostic and can drive AI Agents across text and voice. For chat, they interpret text directly, while for voice, they work alongside Automatic Speech Recognition (ASR) and Text-to-Speech (TTS) systems to deliver natural, human-like voice interactions.

Q6. What are the key benefits of LLM-powered AI Agents for businesses?

They improve response times, reduce operational costs, provide personalised experiences, and enable seamless human handovers for complex cases. A PwC survey found that 73% of businesses see AI Agents as a competitive advantage, and 75% are confident in their AI Agent strategy — showing how critical they are for business growth.

Q7. What challenges should companies consider when deploying LLM bots?

Key considerations include ensuring compliance with data privacy laws, reducing latency in voice AI conversations, preventing bias in multilingual responses, managing the cost of fine-tuning, and designing clear human handover processes.

Q8. Which industries benefit most from LLM-powered AI Agents?

BFSI, healthcare, retail, telecom, and e-commerce are leading adopters. For example, banks use AI Agents for KYC checks and fraud detection, healthcare providers for appointment scheduling and report delivery, and retailers for returns, refunds, and product recommendations.