Understanding Arabic LLM Models

- August 25th, 2025 / 5 Mins read

- Aarti Nair

Debunking Arabic LLM Models

Have you ever tried using a chatbot in Arabic and felt the response just didn’t capture what you meant? Or noticed how English-based AI models seem far more accurate than their Arabic counterparts? If so, you’ve experienced first-hand the gap in how AI understands languages.

Arabic is spoken by more than 400 million people across 25+ countries, yet most large language models (LLMs) are trained with English data at their core. That leaves a huge question: how can businesses, governments, and educators in the MENA region truly benefit from AI if the technology doesn’t “speak” their language?

From powering customer support agents in Gulf-based banks, to building e-learning platforms for schools in Egypt, to making search engines and translations more relevant across dialects — the potential of Arabic LLMs is massive. But unlocking this potential requires more than just running Arabic through an English-trained model. It means addressing the linguistic richness, dialectal variety, and cultural nuances unique to Arabic.

So, what exactly is an Arabic LLM?

Why does the region need language-specific models when multilingual ones already exist? And more importantly, what does it take to create one?

In this blog, we’ll explore these questions — breaking down the importance, challenges, and opportunities in building Arabic LLMs, and why doing so is critical for AI adoption across MENA and beyond.

What is an Arabic LLM?

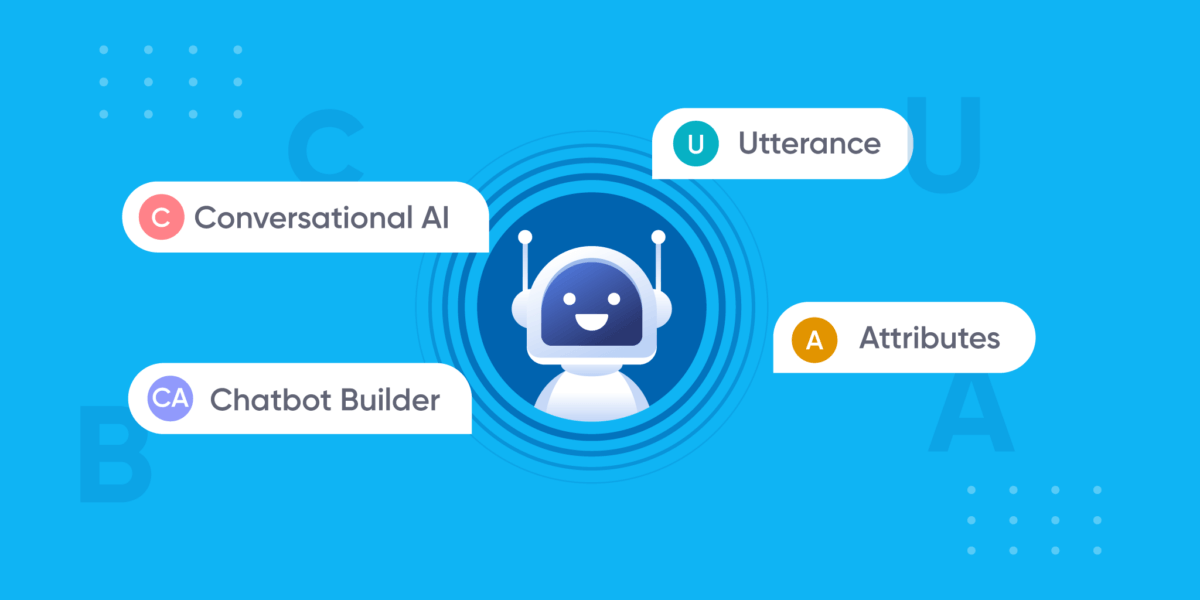

To understand what an Arabic LLM is, let’s start with the basics. A Large Language Model (LLM) is an advanced AI system trained on massive amounts of text to understand, generate, and predict human language. Popular examples you may know — like GPT or BERT — are typically trained on English-dominant datasets. That’s why they perform exceptionally well in English, but often fall short when tested with Arabic.

So, where does an Arabic LLM fit in? Unlike general-purpose or multilingual models, an Arabic AI model is built with a focus on the unique structure, script, and dialects of the Arabic language. Arabic isn’t just one language — it’s a collection of dialects like Egyptian, Gulf, Levantine, and Maghrebi, alongside Modern Standard Arabic (MSA) used in formal settings. Training a model specifically for Arabic helps it capture the nuances that multilingual models tend to gloss over.

Why is this so important? Imagine asking a multilingual AI to interpret the Arabic word “كتاب” (kitab). Depending on context, it could mean book, scripture, or even record. Without enough Arabic-specific training data, the model may pick the wrong meaning. Arabic LLMs minimise these errors by learning directly from large, curated Arabic datasets — covering everything from news media and literature to social media conversations.

Over the past few years, researchers have introduced powerful Arabic NLP models to fill this gap. Examples include:

AraBERT: modelled after Google’s BERT but trained on Arabic text.

AraGPT2: a GPT-2-style model pre-trained on Arabic text for text generation.

CAMeL Tools: a toolkit (rather than a single model) for morphological analysis and other Arabic NLP tasks.

MARBERT: designed for understanding both Modern Standard Arabic and dialectal Arabic from social media.

These models represent the foundation of Arabic LLM development, but the field is still young compared to English. That’s why creating dedicated Arabic LLMs is not just a technical curiosity — it’s an essential step for making AI useful, inclusive, and truly global.

Why Do We Need Arabic-Specific LLMs?

If multilingual AI models like GPT or BERT already support dozens of languages, you might wonder: why do we need LLMs built specifically for Arabic? The answer lies in the unique richness and complexity of the Arabic language — and the fact that generic models often miss the mark when it comes to cultural and linguistic accuracy.

First, Arabic is not a single, uniform language. While Modern Standard Arabic (MSA) is used in news, government, and education, everyday conversations take place in dialects like Egyptian, Gulf, Levantine, or Maghrebi. Each dialect has its own vocabulary, grammar quirks, and even different spellings for the same word. A model trained without attention to this diversity can easily misinterpret what a user is asking.

Second, cultural and contextual accuracy is critical. English-trained models may translate Arabic literally but fail to capture meaning. For example, an idiom like “يد واحدة لا تصفق” (literally “one hand cannot clap”) means “teamwork is essential.” Without Arabic-specific training, an AI might return nonsense instead of the intended wisdom. The same applies to slang, religious phrases, and local expressions that carry heavy cultural context in MENA markets.

Third, there’s a growing business and government demand for Arabic-first AI. From GCC governments digitising citizen services, to banks and insurers automating customer support, to telecom operators deploying Arabic voice assistants, organisations across the region need models that can interact natively and fluently. In education, e-learning platforms are increasingly turning to Arabic LLMs to personalise content for students and support teachers in local classrooms.

Consider the difference this makes in practice: a bank’s chatbot trained on English-centric data might struggle to handle a Gulf customer’s request phrased in colloquial Arabic, while an Arabic-specific LLM can respond naturally and correctly. This isn’t just a matter of convenience — it directly impacts customer trust, satisfaction, and brand loyalty.

In short, Arabic LLMs aren’t just a nice-to-have. They’re the backbone for creating reliable, context-aware AI applications across MENA, from chatbots and voice assistants to education and government platforms.

Challenges in Building Arabic LLMs

Creating an Arabic Large Language Model isn’t as simple as translating English datasets into Arabic. The language comes with unique structural, cultural, and technical hurdles that make training AI far more complex. Let’s explore the biggest challenges developers face.

1. Complex Morphology

Arabic is a root-based language where a single root can generate dozens of words with different meanings depending on prefixes, suffixes, and patterns. For example, the root ك-ت-ب (k-t-b) can produce kitab (book), maktab (office), katib (writer), and maktaba (library). For an AI model, this morphological richness makes tokenisation and meaning extraction much harder than in languages like English.
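To make the root-and-pattern idea concrete, here is a minimal sketch in simplified Latin transliteration (long vowels are not marked). The pattern notation with C1, C2, C3 slots and the helper names are illustrative assumptions, not the API of any Arabic NLP library.

```python
# Toy root-and-pattern morphology in simplified Latin transliteration.
# C1, C2, C3 are placeholder slots for the three root consonants.
# The four patterns are the ones behind the k-t-b examples in the text.
PATTERNS = ["C1iC2aC3", "maC1C2aC3", "C1aC2iC3", "maC1C2aC3a"]


def apply_pattern(root, pattern):
    """Fill the C1/C2/C3 slots of a pattern with the three root consonants."""
    c1, c2, c3 = root
    return pattern.replace("C1", c1).replace("C2", c2).replace("C3", c3)


def derive(root):
    """Return one surface form per pattern for the given root."""
    return [apply_pattern(root, pattern) for pattern in PATTERNS]


forms = derive(("k", "t", "b"))
print(forms)  # ['kitab', 'maktab', 'katib', 'maktaba']
```

Four visibly different words share one root, which is why a tokeniser that only sees surface strings can miss the relationship between them.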

2. Dialectal Diversity

Unlike English, which has relatively minor regional variations, Arabic splits into 30+ dialects that differ so much they can almost be considered separate languages. Egyptian Arabic, Gulf Arabic, and Levantine Arabic each use unique words, slang, and grammar. A sentence that makes perfect sense in Cairo might confuse someone in Riyadh. Training an LLM to handle this variety requires massive datasets across multiple dialects, which are not always available.

3. Script and Orthography

Arabic is written right-to-left and often without diacritics (short vowel markings). This creates ambiguity. For example, the undiacritised word كتب could be read as kataba (he wrote), kutub (books), or kutiba (it was written), depending on context. Humans can resolve this easily, but for AI, missing diacritics create major interpretation challenges. Additionally, elongated writing styles in social media (لاااااا for emphasis) further complicate preprocessing.
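The ambiguity can be shown in a few lines. The sketch below writes three fully vocalised words with Unicode escapes (so the combining marks are explicit) and strips the diacritics; the function name and the choice of the range U+064B to U+0652 are assumptions made for illustration.

```python
import re

# Arabic short-vowel and related marks (tanwin, fatha, damma, kasra,
# shadda, sukun) occupy U+064B..U+0652.
DIACRITICS = re.compile(r"[\u064B-\u0652]")


def strip_diacritics(text):
    """Remove short-vowel marks, leaving only the base letters."""
    return DIACRITICS.sub("", text)


# kaf = U+0643, ta = U+062A, ba = U+0628
# fatha = U+064E, damma = U+064F, kasra = U+0650
vocalised = {
    "\u0643\u064E\u062A\u064E\u0628\u064E": "kataba (he wrote)",
    "\u0643\u064F\u062A\u064F\u0628": "kutub (books)",
    "\u0643\u064F\u062A\u0650\u0628\u064E": "kutiba (it was written)",
}

bare_forms = {strip_diacritics(word) for word in vocalised}
print(len(vocalised), "vocalised words ->", len(bare_forms), "bare form")
```

All three entries collapse to the same three-letter string, so a model reading undiacritised text has to recover the intended word from context alone.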

4. Low-Resource Problem

Compared to English, Arabic lacks the same scale of clean, labelled datasets. While billions of English web pages are available for training, Arabic resources are fewer, fragmented, and often domain-specific (e.g., news, religious texts). This shortage makes it difficult to build robust models, particularly for conversational AI where informal dialectal data is critical.

5. Bias and Representation

When datasets are collected unevenly, LLMs may become biased toward a particular dialect or region. For example, if most of the training data comes from Egypt, the AI might default to Egyptian Arabic, alienating Gulf or Maghrebi speakers. This not only reduces accuracy but also risks excluding entire communities from AI-driven services.

Each of these hurdles affects how well an Arabic LLM can understand intent, generate text, and provide accurate responses. Without addressing morphology, dialects, orthography, and data scarcity, businesses risk deploying AI that frustrates rather than helps. That’s why specialised approaches — from advanced tokenisation methods to dialect-focused data collection — are crucial for making Arabic LLMs truly effective.

How to Create an Arabic LLM (Step-by-Step)

Building an Arabic Large Language Model isn’t just about applying existing English-based frameworks. It requires a carefully structured approach that respects the language’s morphology, dialects, and cultural nuances. Here’s a step-by-step look at how developers and researchers can create effective Arabic LLMs.

1. Data Collection

Every LLM begins with data. For Arabic, this means gathering large-scale corpora from diverse sources:

News outlets and government publications (for Modern Standard Arabic).

Social media platforms like Twitter or Facebook (for dialects and informal use).

Religious texts, literature, and Wikipedia (for depth and context).

User-generated content (for real-world, conversational style).

The more balanced the data across dialects, the better the model will perform across regions.
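One common way to approach this balance is to sample from each source with a probability proportional to its size raised to a power below 1, which boosts smaller dialect sets without ignoring the large ones. The sketch below is an illustration of that idea only; the sentence counts and the value of `alpha` are hypothetical.

```python
def sampling_weights(counts, alpha=0.5):
    """Return sampling probabilities proportional to count ** alpha.

    alpha=1.0 keeps the raw proportions; alpha=0.0 samples every
    source equally; values in between up-weight small sources.
    """
    scaled = {name: n ** alpha for name, n in counts.items()}
    total = sum(scaled.values())
    return {name: value / total for name, value in scaled.items()}


# Hypothetical sentence counts per variety (not real corpus statistics).
counts = {"msa": 900_000, "egyptian": 80_000, "gulf": 15_000, "maghrebi": 5_000}

weights = sampling_weights(counts, alpha=0.5)
for name, weight in weights.items():
    raw_share = counts[name] / sum(counts.values())
    print(f"{name:10s} raw={raw_share:.3f} sampled={weight:.3f}")
```

With these made-up numbers, MSA still dominates but no longer swamps the dialects, and the smallest set is seen far more often than its raw share would allow.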

2. Data Cleaning & Normalisation

Arabic text often contains inconsistencies such as missing diacritics, inconsistent transliterations (e.g., Ramadan vs. Ramadhan), and elongated words for emphasis (لاااااا). Preprocessing must handle:

Removing noise (hashtags, emojis, irrelevant symbols).

Standardising orthography (unifying spellings and diacritics).

Normalising dialectal variations where possible.

Without cleaning, the model risks learning incorrect or inconsistent patterns.
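A minimal sketch of such a cleaning pass, using only Python's standard library, might look like the following. The specific rules (which emoji ranges to drop, collapsing any letter repeated three or more times, mapping hamza-carrying alef forms to bare alef) are assumptions chosen for illustration; a production pipeline would tune them per dataset.

```python
import re

HASHTAGS = re.compile(r"#\w+")
# A rough emoji/symbol range; real pipelines usually use a fuller list.
EMOJI = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
DIACRITICS = re.compile(r"[\u064B-\u0652]")
TATWEEL = "\u0640"  # the kashida stretching character
ALEF_VARIANTS = re.compile("[\u0622\u0623\u0625]")  # alef with madda/hamza
# The same Arabic letter three or more times in a row (emphatic elongation).
ELONGATION = re.compile(r"([\u0621-\u064A])\1{2,}")


def normalise(text):
    """Apply simple noise removal and orthographic normalisation."""
    text = HASHTAGS.sub(" ", text)
    text = EMOJI.sub(" ", text)
    text = DIACRITICS.sub("", text)
    text = text.replace(TATWEEL, "")
    text = ALEF_VARIANTS.sub("\u0627", text)  # unify to bare alef
    text = ELONGATION.sub(r"\1", text)        # collapse to a single letter
    return " ".join(text.split())             # tidy whitespace


print(normalise("لاااااا #tag"))
```

The elongation rule only fires on three or more repeats, so ordinary doubled letters are left alone. It is still a heuristic and will occasionally over-correct.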

3. Preprocessing & Tokenisation

Tokenisation is particularly tricky in Arabic due to its rich morphology. A single root can create many forms with different prefixes/suffixes. Morphological segmenters and analysers like Farasa or MADAMIRA are often used to break words into meaningful components before subword tokenisation. Proper preprocessing ensures that the LLM doesn’t treat variations of the same root word as entirely different terms.
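To illustrate what "breaking words into meaningful components" means, here is a deliberately naive prefix splitter. It is not how Farasa or MADAMIRA work (those rely on trained models and lexicons); the prefix list, the minimum stem length, and the function name are all assumptions for this toy example.

```python
CONJUNCTIONS = ("\u0648", "\u0641")  # wa- ("and"), fa- ("so")
ARTICLE = "\u0627\u0644"             # al- ("the")
MIN_STEM = 3                          # do not split if the remainder is shorter


def segment(word):
    """Split off a leading conjunction and definite article, if present."""
    parts = []
    if word.startswith(CONJUNCTIONS) and len(word) - 1 >= MIN_STEM:
        parts.append(word[0] + "+")
        word = word[1:]
    if word.startswith(ARTICLE) and len(word) - len(ARTICLE) >= MIN_STEM:
        parts.append(ARTICLE + "+")
        word = word[len(ARTICLE):]
    parts.append(word)
    return parts


# "wa-al-kitab" ("and the book") and plain "kitab" ("book")
print(segment("\u0648\u0627\u0644\u0643\u062A\u0627\u0628"))
print(segment("\u0643\u062A\u0627\u0628"))
```

Both inputs end in the same stem, so downstream components can treat them as the same word. A rule this simple will wrongly split words that merely begin with these letters, which is exactly why dedicated analysers exist.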

4. Model Training

Once data is ready, training begins using transformer architectures (BERT- or GPT-style models). For Arabic, researchers often start from a pre-trained base model (e.g., AraBERT, AraGPT2) and then fine-tune it with domain-specific data. Training involves handling:

Morphological richness.

Balancing Modern Standard Arabic (MSA) and dialects.

Managing longer token sequences, since morphologically rich words are often split into several subword tokens.

This stage requires powerful GPUs/TPUs and significant compute resources.

5. Evaluation & Benchmarking

Quality is measured with metrics and task-specific tests such as:

BLEU score for translation quality.

Perplexity for how well the model predicts held-out text (lower is better).

Intent recognition tests for conversational AI.

Dialect classification accuracy to see how well the model distinguishes between Arabic dialects.

A model that performs well in MSA but poorly in dialects may still fail in real-world applications.
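Two of these checks are easy to sketch in plain Python: perplexity computed from per-token log-probabilities, and accuracy broken down by dialect so that a weak dialect is not hidden by a strong MSA score. The function names and the sample data below are hypothetical.

```python
import math
from collections import defaultdict


def perplexity(token_logprobs):
    """exp of the negative mean natural-log probability per token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def accuracy_by_dialect(examples):
    """examples: iterable of (dialect, expected_label, predicted_label)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for dialect, expected, predicted in examples:
        total[dialect] += 1
        correct[dialect] += int(expected == predicted)
    return {dialect: correct[dialect] / total[dialect] for dialect in total}


# Hypothetical intent-recognition results for two varieties.
results = [
    ("msa", "renew_licence", "renew_licence"),
    ("msa", "check_balance", "check_balance"),
    ("gulf", "renew_licence", "renew_licence"),
    ("gulf", "check_balance", "renew_licence"),
]

print(accuracy_by_dialect(results))
print(perplexity([math.log(0.25)] * 4))
```

Reporting the per-dialect breakdown alongside the overall score makes the gap described above visible before deployment rather than after.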

6. Fine-Tuning for Industries

Finally, Arabic LLMs are fine-tuned for specific domains:

Finance: understanding terms like zakat, Murabaha, or Sharia compliance.

Healthcare: interpreting patient-doctor conversations in local dialects.

Customer Support: powering Arabic chat and voice AI agents to handle queries across MENA.

Fine-tuning ensures the LLM isn’t just linguistically correct but also contextually relevant for the industry it serves.

Each of these steps — from data collection to fine-tuning — ensures that Arabic LLMs don’t just translate but actually understand. Done right, the result is an AI system that can converse naturally across dialects, provide accurate answers, and scale to real-world business needs.

Tools, Frameworks, and Datasets for Arabic LLMs

Building an Arabic Large Language Model isn’t just about having powerful hardware or a clever algorithm. The real foundation lies in the tools, frameworks, and datasets that support the entire process. Think of them as the building blocks: frameworks provide the infrastructure to design and train models, pre-trained models give you a head start, datasets supply the knowledge the model learns from, and annotation tools help manage the complexity of Arabic’s unique grammar and structure.

Let’s look at these categories in detail.

Frameworks: The Infrastructure for Training Models

Frameworks are the core engines that let developers build and train LLMs. They provide the architecture and flexibility needed to experiment with Arabic-specific challenges like tokenisation, morphology, and diacritics.

Hugging Face Transformers: A widely used library for transformer-based models such as BERT, GPT, and T5. It offers pre-built components and Arabic-ready models, making it easier to adapt state-of-the-art architectures for Arabic tasks.

TensorFlow & PyTorch: The two most popular deep learning frameworks. PyTorch, with its flexibility, is favoured for research on Arabic NLP, while TensorFlow often powers production-ready deployments.

AllenNLP: Helpful for building custom NLP pipelines. It’s particularly useful for researchers experimenting with new approaches to Arabic tokenisation or intent recognition.

Pre-Trained Arabic NLP Models: Head Starts for Developers

Training an LLM from scratch is resource-heavy. Pre-trained Arabic models act as a shortcut, giving developers a base that can be fine-tuned for specific tasks like sentiment analysis, translation, or conversational AI.

AraBERT: Modelled after Google’s BERT, but trained on Arabic text. Known for strong performance in classification and Q&A tasks.

AraGPT2: Built on the GPT-2 architecture and pre-trained on Arabic text for generation. Useful for conversational AI and creative applications.

MARBERT: Specially designed to handle both Modern Standard Arabic and dialects, making it effective for noisy, informal sources like Twitter.

CAMeL Tools: Not just a model but a toolkit with multiple NLP utilities, including morphological analysis and dialect detection.

Datasets: The Knowledge Base of Arabic LLMs

Datasets are the textbooks from which LLMs learn. For Arabic, collecting clean, diverse, and balanced data is one of the hardest parts.

AraCorpus: A large-scale Arabic corpus covering news, books, and online sources.

OpenITI: Contains pre-modern and classical Arabic texts, offering depth for historical and literary applications.

OSCAR (Arabic subset): Extracted from Common Crawl, covering a wide range of modern Arabic content.

Arabic Wikipedia Dumps: A structured source of Modern Standard Arabic content, often used as a baseline.

Social Media Crawls: Critical for capturing dialects, slang, and informal writing styles that appear in everyday communication.

Annotation and Preprocessing Tools: Making Arabic Manageable

Arabic’s root-based structure, lack of diacritics, and dialect diversity make preprocessing essential. Annotation tools act like teachers marking up the text, helping AI recognise roots, meanings, and grammar.

MADAMIRA: A widely used toolkit for morphological analysis, tokenisation, and part-of-speech tagging.

Farasa: Lightweight and fast, ideal for tokenisation and named entity recognition.

Qalsadi: A Python library for root extraction and morphological analysis, particularly useful for handling Arabic’s complex word forms.

Together, these resources ensure that Arabic LLMs aren’t just technically sound but linguistically aware. Frameworks provide the structure, pre-trained models save time, datasets give knowledge, and annotation tools tackle the intricacies of Arabic grammar. Without these layers, building an effective Arabic LLM would be like trying to construct a skyscraper without steel, cement, or blueprints.

Applications of Arabic LLMs

So, where do Arabic LLMs actually make a difference? Beyond the research labs and benchmarks, their real value comes to life when businesses, governments, and communities use them to solve everyday problems. With 400+ million Arabic speakers spread across dozens of countries, the potential applications are wide-ranging — from customer service to healthcare. Let’s explore the most impactful use cases.

1. Customer Support and AI Agents

One of the fastest-growing applications of Arabic LLMs is in AI-powered customer support. Businesses in banking, telecom, retail, and travel rely heavily on customer interactions — and in MENA, that means Arabic-first support.

Chat AI Agents trained on Arabic LLMs can understand both Modern Standard Arabic (MSA) and dialects, offering quick, accurate responses.

Voice AI Agents go further by handling natural conversations over calls, detecting tone and intent while speaking fluently in Arabic.

For example, a Gulf bank deploying an Arabic Voice AI Agent can seamlessly handle loan queries or account updates in local dialects, freeing human agents to focus on complex cases.

2. Search and Knowledge Retrieval

Generic search engines often struggle with Arabic morphology and dialect variations. An Arabic LLM, however, can interpret meaning rather than just match keywords.

In e-commerce, this means showing the right product even if the user types it differently across dialects.

In knowledge bases, it means customers get accurate answers instead of being shown irrelevant results.

This makes Arabic LLMs essential for building context-aware Arabic search engines and chatbots.

3. Education and E-Learning

Education is one of the sectors that stands to benefit most. Imagine an AI tutor that doesn’t just translate English content into Arabic but actually teaches in the student’s dialect.

E-learning platforms can use Arabic LLMs to personalise lessons, simplify complex subjects, and engage students in a language they’re most comfortable with.

Teacher support tools can generate quizzes, summaries, or explanations tailored to different Arabic curricula.

This bridges the gap between digital learning and cultural context, making AI a true enabler in classrooms across the region.

4. Healthcare and Patient Interaction

In healthcare, clarity of communication can be the difference between correct diagnosis and confusion. Arabic LLMs are being used to:

Translate medical instructions into clear, dialect-specific Arabic.

Power Voice AI agents that collect patient information before consultations.

Support healthcare chatbots that answer basic health queries around-the-clock in Arabic.

This reduces the burden on doctors while making care more accessible to patients across MENA.

5. Government and Citizen Services

Governments in the GCC and North Africa are rapidly digitising citizen services. From renewing IDs to paying taxes, AI agents trained with Arabic LLMs provide faster, more accessible interactions.

They can understand everyday requests like “جدد لي رخصة القيادة” (renew my driver’s licence), including dialectal phrasings, without requiring users to switch to formal MSA.

They help reduce queues at service centres by automating simple requests.

This is why governments are increasingly funding Arabic NLP research — to make public services more inclusive and efficient.

The adoption of Arabic LLMs goes far beyond technology. It’s about accessibility, inclusivity, and trust. When people can speak to AI in their own dialect and be understood, they’re more likely to engage with digital services, trust the brand, and stay loyal. Platforms like Verloop.io, with its Arabic chat and voice AI agents, are already putting this into practice — helping businesses serve millions of Arabic-speaking customers with accuracy and empathy.

Making Arabic LLMs Central to Your AI Strategy

Arabic is more than just another language — it’s a living, evolving ecosystem of dialects, cultures, and contexts. Building effective Arabic LLMs is not a technical luxury but a business necessity for companies and governments across MENA. From powering Voice AI Agents in banking to enhancing chatbots in education and healthcare, these models ensure that AI doesn’t just speak Arabic, but truly understands it.

As demand grows, the question is no longer “Do we need Arabic LLMs?” but “How quickly can we integrate them into our strategy?” Businesses that move first will not only gain efficiency but also build deeper trust with Arabic-speaking customers — a competitive advantage that’s hard to replicate.

If you’re looking to deliver authentic, dialect-aware, and scalable Arabic AI experiences, platforms like Verloop.io can help. With its advanced Arabic chat and voice AI agents, Verloop.io makes it easier for businesses to serve millions of users in their preferred language, while keeping conversations natural, context-aware, and impactful.

Take the next step: Explore how Arabic-first AI can transform your customer engagement — from awareness to loyalty.

FAQs

1. What is an Arabic LLM?

An Arabic Large Language Model (LLM) is an AI system trained specifically on Arabic text, including Modern Standard Arabic and dialects. Unlike multilingual models, it captures the unique grammar, morphology, and cultural context of Arabic, making responses more accurate.

2. Why do we need Arabic-specific LLMs?

Generic models often misinterpret Arabic idioms, slang, or dialectal variations. Arabic-specific LLMs provide culturally and linguistically relevant responses, which is vital for customer support, education, healthcare, and government services in MENA.

3. What are the biggest challenges in building Arabic LLMs?

Key challenges include Arabic’s complex root-based morphology, dialect diversity, script and orthography issues (e.g., missing diacritics), limited datasets, and risks of dialectal bias in training.

4. How do you create an Arabic LLM?

The process involves collecting diverse datasets, cleaning and normalising the text, tokenising Arabic words, training transformer-based models, evaluating accuracy, and fine-tuning for specific industries such as finance, education, or healthcare.

5. What datasets are used to train Arabic LLMs?

Popular datasets include AraCorpus, OpenITI, OSCAR (Arabic subset), Arabic Wikipedia dumps, and social media crawls. These sources ensure coverage of both Modern Standard Arabic and informal dialects.

6. What are some examples of Arabic NLP models?

Notable models and toolkits include AraBERT, AraGPT2, MARBERT, and CAMeL Tools. Each is designed to handle different Arabic NLP tasks such as sentiment analysis, text generation, or dialect identification.

7. What are the applications of Arabic LLMs?

They’re used in customer support AI agents, Arabic voice assistants, education platforms, healthcare communication, and government digital services. These applications make services more accessible and user-friendly.

8. What is the future of Arabic LLMs?

The future lies in predictive and multimodal AI — models that not only understand Arabic text and speech but also anticipate intent, personalise experiences, and integrate across chat, voice, and even visual inputs.