Build a Bot: Your Guide to Building Your Own AI Agent

July 30th, 2025 / 7 min read

Aarti Nair

Building an AI agent isn’t just a technical task anymore — it’s a strategic move that defines how businesses engage, support, and scale.

According to recent market research, the AI agent market was valued at $5.25 billion in 2024 and is expected to surge to $52.62 billion by 2030, growing at a CAGR of 46.3%. What’s driving this explosive growth? A significant shift from basic, rule-based bots to intelligent agents powered by foundational models.

Unlike traditional automation, modern AI agents can understand context, make decisions, and execute complex, multi-step tasks — autonomously. Whether it’s handling thousands of support queries across channels or acting as a co-pilot for human teams, these agents are becoming central to digital operations.

In this blog, we’ll break down how to build an AI agent from the ground up, covering everything from setting goals and choosing the right architecture to training with LLMs and deploying in real-world scenarios.

Let’s get started.

What is an AI Agent?

An AI agent is a software-based system designed to perform tasks autonomously by perceiving its environment, processing inputs, and taking actions to achieve defined goals. Unlike traditional bots that follow pre-programmed rules, AI agents can make context-aware decisions and often improve over time using machine learning.

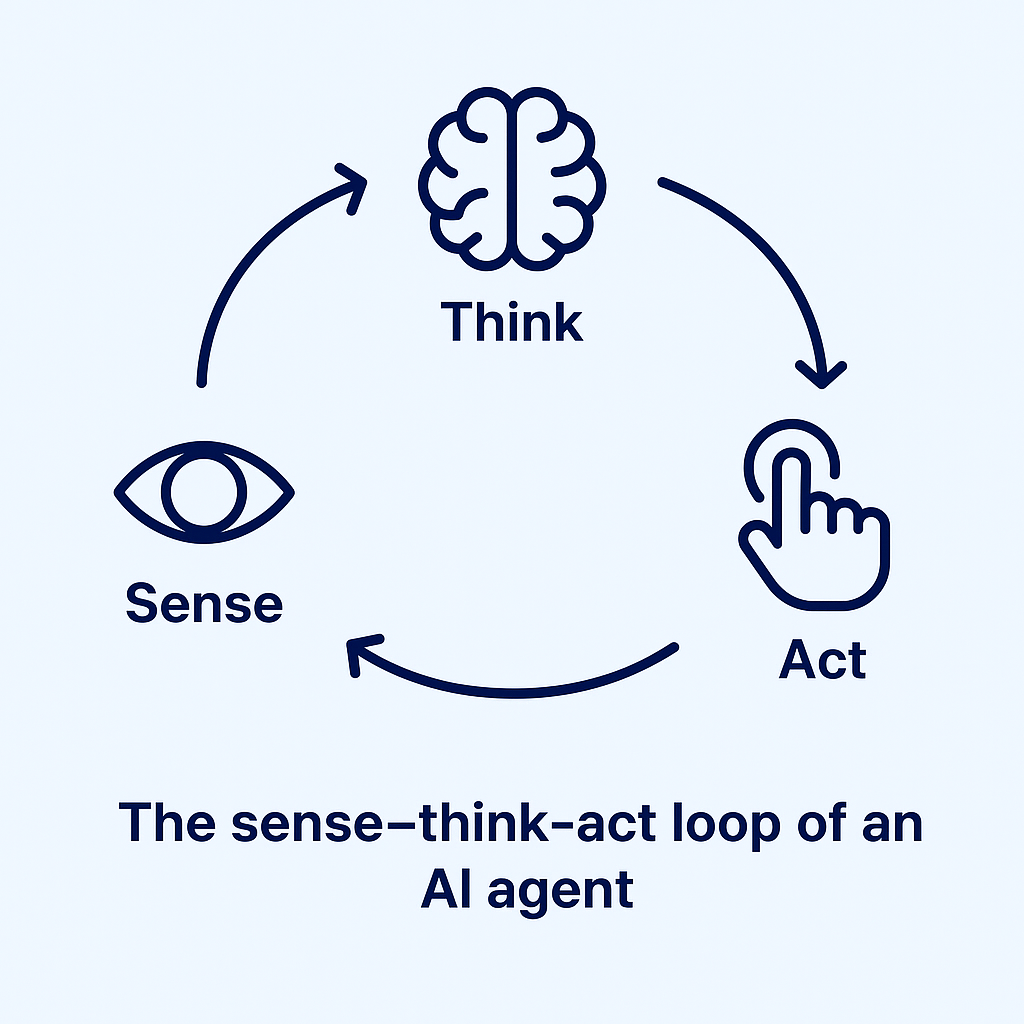

At their core, AI agents operate on the sense–think–act loop:

- Sense: They collect data from inputs like user messages, sensor feeds, APIs, or databases.

- Think: They interpret that data using models (such as LLMs), rules, or a combination of both.

- Act: Based on the interpretation, they respond or take action, like replying to a customer, escalating an issue, or triggering an automation.
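To make the loop concrete, here is a minimal Python sketch. It illustrates the idea under simple assumptions and is not a production design: `think` is a hypothetical rule-based stand-in for what would normally be an LLM call, and all function names are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str      # "reply" or "escalate"
    payload: str


def sense(raw_input: str) -> dict:
    """Sense: normalise the incoming message into an observation."""
    return {"text": raw_input.strip().lower()}


def think(observation: dict) -> Action:
    """Think: a hypothetical rule-based stand-in for a model call."""
    if "human" in observation["text"]:
        return Action("escalate", "Connecting you to a live agent.")
    return Action("reply", f"You said: {observation['text']}")


def act(action: Action) -> str:
    """Act: return the text; a real agent would call a channel or handoff API."""
    prefix = "[handoff] " if action.kind == "escalate" else ""
    return prefix + action.payload


def run_agent(messages: list[str]) -> list[str]:
    # One pass of sense -> think -> act per incoming message.
    return [act(think(sense(m))) for m in messages]


print(run_agent(["Hi there", "I want a HUMAN please"]))
```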

What makes modern AI agents especially powerful is their ability to handle complex, multi-step workflows. With the help of foundation models (like GPT or Claude) and patterns such as agentic RAG, these agents can go beyond scripted responses. They can summarise information, query knowledge bases in real time, adapt tone based on user emotion, and even decide when to bring in a human.

In customer support, for instance, an AI agent could:

- Greet the user

- Fetch order details via API

- Detect frustration from language

- Escalate the case to a live agent if needed

All of this, with no manual hand-holding.
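The "detect frustration" and "escalate" steps can start out as something as simple as the keyword heuristic below. This is only a sketch: production agents typically use a sentiment or emotion model, and the cue list, weights, and threshold here are hypothetical values you would tune on real transcripts.

```python
import re

# Hypothetical frustration cues and weights; tune these on real transcripts.
FRUSTRATION_CUES = {
    "ridiculous": 2,
    "useless": 2,
    "still waiting": 2,
    "angry": 2,
    "again": 1,
    "refund": 1,
}
ESCALATION_THRESHOLD = 3  # assumed cut-off


def frustration_score(message: str) -> int:
    text = message.lower()
    score = sum(weight for cue, weight in FRUSTRATION_CUES.items() if cue in text)
    # Each run of repeated exclamation marks adds a point.
    score += len(re.findall(r"!{2,}", message))
    # Each all-caps word (longer than two characters) adds a point.
    score += sum(1 for word in message.split() if len(word) > 2 and word.isupper())
    return score


def should_escalate(message: str) -> bool:
    return frustration_score(message) >= ESCALATION_THRESHOLD


print(should_escalate("Where is my order?"))                   # False
print(should_escalate("This is RIDICULOUS, still waiting!!"))  # True
```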

Next, we’ll look at the key building blocks behind these intelligent agents.

Key Building Blocks Behind AI Agents

Building a high-functioning AI agent isn’t just about adding intelligence to a chatbot. It requires a carefully orchestrated tech stack that supports perception, reasoning, memory, and action. Below are the foundational components that make modern AI agents capable of handling complex tasks autonomously:

1. Foundation Models (LLMs)

At the core of most advanced AI agents lies a Large Language Model (LLM) like GPT or LLaMA. These models enable agents to understand user intent, generate human-like responses, and perform reasoning. With fine-tuning and prompt engineering, LLMs can be customised to specific domains like healthcare, finance, or customer support.

Foundation models are what make AI agents “smart”—capable of dynamic, unscripted conversations.

2. Retrieval-Augmented Generation (RAG)

RAG architectures allow agents to pull up-to-date information from internal knowledge bases or external sources before generating a response. Instead of relying solely on what the model was trained on, RAG helps your agent stay relevant and accurate.

It reduces (though it doesn’t eliminate) hallucinations and enables fact-based responses grounded in up-to-date data.

3. Memory Store

Memory is what separates a reactive chatbot from an intelligent agent. Memory systems help track user preferences, previous conversations, and ongoing tasks across sessions.

Persistent memory lets your AI agent build context over time, which is crucial for multi-turn interactions or long-term relationships.

4. Tool Use / Action Layer

Agents aren’t just conversational—they’re actionable. Integrations with third-party tools (CRMs, APIs, databases, or schedulers) allow the AI agent to do things, such as booking appointments, issuing refunds, or triggering workflows.

Without this layer, your agent is just a talking head. With it, it becomes an operational assistant.

5. Orchestration Framework

This is the layer that decides how the agent thinks and acts. It includes state tracking, decision trees, and action chaining. Newer agentic frameworks like LangGraph, CrewAI, and AutoGen let you manage multi-agent flows, retries, memory scopes, and condition-based actions.

Think of this as the brain’s executive function that manages logic, order of operations, and fallback plans.

6. Voice & Multimodal Interfaces (Optional)

Voice AI and multimodal support (text + image + voice) are becoming increasingly common, especially in industries like healthcare, travel, and customer service. Voice activity detection (VAD), speech synthesis (TTS), and emotion detection enhance natural interactions.

If your agent is speaking to users, voice latency, tone, and pronunciation become critical UX factors.

How to Build and Test an AI Agent?

The real power of AI agents lies in their ability to go beyond static Q&A. Today’s agents can independently make decisions, remember user context, and trigger actions across multiple systems — all without human hand-holding.

Let’s break down how to build your own AI agent from ideation to testing it.

Step 1: Define Your Agent’s Purpose

Before building your AI agent, get clear on its role, just like writing a job description. Whether it’s handling post-purchase queries, qualifying leads, or resetting passwords, defining the purpose sets the foundation for everything else.

Here are the key questions to ask:

- What’s the primary goal of the agent? (e.g. reduce support load, improve resolution time)

- Who is the end-user? (customers, employees, partners?)

- Where will the agent operate? (WhatsApp, web chat, IVR, Slack?)

- What kind of queries or workflows will it handle? (simple FAQs vs. complex multi-step tasks)

- How should it behave when it doesn’t know something? (retry, escalate, fallback?)

- What business metrics will define its success? (CSAT, deflection rate, conversions?)

Think of this as giving your AI agent a clear purpose before you give it a voice.
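It can help to capture these answers in a small, machine-readable spec that the rest of your build reads from, so the agent’s purpose doesn’t drift as the project grows. In the sketch below, `AgentSpec` and its fields are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    goal: str
    end_users: str
    channels: tuple[str, ...]
    workflows: tuple[str, ...]
    on_unknown: str                       # "retry", "escalate", or "fallback"
    success_metrics: tuple[str, ...] = ()

    def __post_init__(self) -> None:
        # Fail fast if the spec is incomplete or inconsistent.
        allowed = {"retry", "escalate", "fallback"}
        if self.on_unknown not in allowed:
            raise ValueError(f"on_unknown must be one of {sorted(allowed)}")
        if not self.channels:
            raise ValueError("at least one channel is required")


support_agent = AgentSpec(
    goal="Reduce support load for post-purchase queries",
    end_users="customers",
    channels=("whatsapp", "web_chat"),
    workflows=("order_status", "reschedule_delivery"),
    on_unknown="escalate",
    success_metrics=("csat", "deflection_rate"),
)
print(support_agent.goal)
```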

Step 2: Choose the Right Brain — Your Foundation Model

Once your agent has a purpose, it needs a brain, and that’s the LLM (Large Language Model). This is what helps it understand human input, generate coherent responses, and adapt its tone and style.

You’ve got two main paths:

1. Hosted APIs (Quick Start)

- OpenAI (GPT-4)

- Anthropic (Claude)

- Google Gemini

- Cohere

These are plug-and-play, making them ideal if you’re building fast and don’t want to manage infra.

2. Open Source (Customisable but heavy-lift)

- LLaMA

- Falcon

- Mistral

These give you more control and can be fine-tuned, but you’ll need MLOps chops and hosting setup.

💡 Pro tip: Start with a hosted LLM. You can layer on guardrails like prompt templates to manage tone, structure, and what the agent should or shouldn’t say.
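Here is a minimal sketch of that pro tip: a prompt template that sets the agent’s name, tone, and off-limits topics, plus a naive check on the model’s output. The template wording, `BLOCKED_TOPICS`, the brand name "Acme", and the `generate` callable are all assumptions; `generate` would wrap whichever hosted LLM client you pick, and a substring check like this is a starting point rather than a complete guardrail.

```python
from string import Template
from typing import Callable

SYSTEM_TEMPLATE = Template(
    "You are $name, a support assistant for $brand.\n"
    "Tone: $tone. Answer in at most $max_sentences sentences.\n"
    "Never discuss: $blocked. If unsure, say you will connect a human."
)

# Hypothetical topics the agent must not talk about.
BLOCKED_TOPICS = ("medical advice", "legal advice", "competitor pricing")
SAFE_FALLBACK = "I'm not able to help with that, but I can connect you to a teammate."


def build_system_prompt(name: str, brand: str, tone: str, max_sentences: int = 3) -> str:
    return SYSTEM_TEMPLATE.substitute(
        name=name, brand=brand, tone=tone,
        max_sentences=max_sentences, blocked=", ".join(BLOCKED_TOPICS),
    )


def guarded_reply(user_msg: str, generate: Callable[[str, str], str]) -> str:
    """Call the model with the templated system prompt, then check its output."""
    system = build_system_prompt("Aria", "Acme", "friendly and concise")
    reply = generate(system, user_msg)
    if any(topic in reply.lower() for topic in BLOCKED_TOPICS):
        return SAFE_FALLBACK
    return reply
```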

Want your agent to use your internal docs or data?

Plug it into a vector database and use RAG (Retrieval-Augmented Generation) to pull real-time, context-aware answers.
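The retrieve-then-generate pattern looks roughly like the sketch below. To stay self-contained, it swaps in a toy word-count "embedding" and an in-memory list for a real embedding model and vector database, and the documents are made up; only the shape of the flow carries over.

```python
import math
import re
from collections import Counter

# Made-up knowledge-base snippets standing in for your vector database.
DOCS = [
    "Refunds are processed within 5-7 business days after pickup.",
    "You can reschedule a delivery up to 24 hours before the slot.",
    "Payment links expire after 30 minutes.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lower-cased word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    # Rank every document by similarity to the query and keep the top k.
    q = embed(query)
    return sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(query: str) -> str:
    context = "\n".join(f"- {d}" for d in retrieve(query))
    return (
        "Answer using only the context below. If it is not covered, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


print(build_rag_prompt("How long do payment links last?"))
```

The resulting prompt is what you would send to your LLM; instructing the model to answer only from retrieved context is one common way to cut down on hallucinations.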

Step 3: Add Memory — Because Nobody Likes Repeating Themselves

Imagine messaging a brand’s support bot about an order issue, only to have it forget what you said two replies ago. Frustrating, right?

That’s what happens when your AI agent lacks memory.

For conversations to feel natural — and helpful — your agent needs to remember what’s going on, both in the short term and across longer journeys.

1. Short-term Memory

Tracks what the user has said within the current conversation.

- Useful for multi-turn tasks like order tracking or appointment booking

- Tools: ConversationBufferMemory in LangChain, session state handling, Pinecone (light usage)
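Under the hood, short-term memory is usually a buffer of recent turns that gets replayed to the model on every call. Here is a plain-Python sketch of that idea with a fixed window (the demo assumes four turns); it is not LangChain’s API, just the same concept without a framework.

```python
from collections import deque


class ShortTermMemory:
    """Keeps the last `max_turns` messages of the current session."""

    def __init__(self, max_turns: int = 6) -> None:
        self.turns: deque = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))  # the oldest turn drops off automatically

    def as_messages(self) -> list[dict]:
        # The role/content shape commonly used by chat-completion APIs.
        return [{"role": r, "content": t} for r, t in self.turns]


memory = ShortTermMemory(max_turns=4)
memory.add("user", "My order #123 hasn't arrived.")
memory.add("assistant", "Sorry about that. Let me check order #123.")
memory.add("user", "Can you also change the delivery address?")
print(memory.as_messages())
```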

2. Long-term Memory

Stores data beyond a single session, like past purchases, user preferences, or previous issues.

- Ideal for loyalty experiences, upsells, and ongoing support

- Tools: Vector stores like FAISS, Weaviate, or Chroma
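Long-term memory largely comes down to persisting facts per user and loading them when a new session starts. The sketch below uses a JSON file only so it runs without any services; `LongTermMemory`, its methods, and the profile fields are hypothetical, and a production agent would use one of the stores above.

```python
import json
import tempfile
from pathlib import Path


class LongTermMemory:
    """Persists per-user facts across sessions in a JSON file (illustrative only)."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.data = json.loads(path.read_text()) if path.exists() else {}

    def remember(self, user_id: str, key: str, value) -> None:
        self.data.setdefault(user_id, {})[key] = value
        self.path.write_text(json.dumps(self.data, indent=2))  # write through to disk

    def recall(self, user_id: str) -> dict:
        return dict(self.data.get(user_id, {}))

    def context_line(self, user_id: str) -> str:
        # Compact summary to prepend to the model prompt in a new session.
        facts = self.recall(user_id)
        return "; ".join(f"{k}: {v}" for k, v in sorted(facts.items())) or "no prior history"


demo_path = Path(tempfile.mkdtemp()) / "memory.json"
LongTermMemory(demo_path).remember("u42", "preferred_language", "Hindi")
print(LongTermMemory(demo_path).context_line("u42"))  # a fresh instance reloads from disk
```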

A memory-enabled agent doesn’t just answer questions — it builds a relationship. And that’s the difference between “just a chatbot” and a smart AI assistant.

Step 4: Connect the Dots — Let Your Agent Take Action

So far, your AI agent can talk and remember. But now it needs to do. Answering questions is great, but real value comes when the agent can act on behalf of your user.

Think:

→ “Where’s my order?” → The agent fetches real-time status from your CRM.

→ “Reschedule my delivery” → It updates the time slot via your logistics API.

→ “Send me a payment link” → It generates one via Razorpay and shares it instantly.

To enable this, you’ll need to integrate with your backend systems. This is where APIs come in.

💼 Common integrations:

- CRM & Lead Management: Salesforce, HubSpot, LeadSquared

- Payment Systems: Razorpay, Stripe

- Analytics & Engagement: MoEngage, CleverTap

- Calendars & Scheduling: Calendly, Google Calendar

- Channels: WhatsApp, Voice, Email, SMS

With tools like OpenAI’s Function Calling, LangChain’s Tool abstraction, or custom APIs, your agent can now trigger these actions mid-conversation — securely and contextually.

Here’s a simple function definition in OpenAI’s function-calling format to illustrate:

```json
{
  "name": "get_order_status",
  "description": "Fetches order status from CRM using order ID",
  "parameters": {
    "type": "object",
    "properties": {
      "order_id": {
        "type": "string",
        "description": "The order ID provided by the user"
      }
    },
    "required": ["order_id"]
  }
}
```

Your agent isn’t just answering anymore. It’s executing.
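The model only proposes the call; your code still has to execute it and send the result back. Below is a minimal dispatcher sketch. It assumes the tool call arrives as a function name plus a JSON-encoded arguments string (the way OpenAI-style tool calls deliver arguments), and `get_order_status` here is a hypothetical stub, not a real CRM integration.

```python
import json
from typing import Any, Callable


def get_order_status(order_id: str) -> dict[str, Any]:
    """Hypothetical stub standing in for a real CRM lookup."""
    fake_db = {"A100": "shipped", "A101": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "not_found")}


# Registry mapping tool names (as declared to the model) to local functions.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {"get_order_status": get_order_status}


def dispatch(name: str, arguments_json: str) -> str:
    """Execute a model-proposed tool call and return a JSON result for the model."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)
        result = TOOLS[name](**args)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong/missing parameters: report back instead of crashing.
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


print(dispatch("get_order_status", '{"order_id": "A100"}'))
```

Returning errors as JSON instead of raising gives the model a chance to recover, for example by asking the user to double-check their order ID.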

Step 5: Give It a Brain — Choosing the Right Orchestration Framework

Now that your AI agent can talk, remember, and act — the question is: how does it decide what to do, and when?

This is where orchestration frameworks step in. Think of them as the control tower for your AI — coordinating memory, tools, and logic behind the scenes.

Without one, your agent is just reacting. With one, it can plan, recover from failures, and prioritise tasks in a structured way, like a real assistant would.

Popular frameworks:

- LangGraph (LangChain): Best for managing stateful, multi-step flows

- AutoGen / CrewAI: Designed for complex, multi-agent coordination (e.g. researcher + writer bots)

- Haystack Agents: Ideal for retrieval-augmented tasks (e.g. querying large document sets)

Tip: Choose based on your agent’s complexity. If it’s just handling support FAQs, LangGraph might be enough. But for multi-agent work, such as a research agent that delegates subtasks to specialised bots, a framework built for agent coordination like AutoGen or CrewAI may be a better fit.
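Whatever framework you choose, the underlying job is similar: track state, pick the next step, retry what fails, and fall back when retries run out. The framework-agnostic sketch below shows that control loop in plain Python. It does not mirror the API of LangGraph, AutoGen, or CrewAI, and the step names, retry count, and simulated CRM timeout are hypothetical.

```python
from typing import Callable

Step = Callable[[dict], str]  # a step updates state and returns the next step's name


def run_flow(steps: dict[str, Step], start: str, state: dict,
             max_retries: int = 2, fallback: str = "handoff") -> dict:
    current = start
    while current != "done":
        for _ in range(max_retries + 1):
            try:
                current = steps[current](state)
                break
            except RuntimeError as exc:
                state.setdefault("errors", []).append(f"{current}: {exc}")
        else:
            # Every attempt failed.
            if current == fallback:
                state["status"] = "failed"  # even the fallback failed; stop here
                return state
            current = fallback
    state.setdefault("status", "completed")
    return state


calls = {"n": 0}


def lookup_order(state: dict) -> str:
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("CRM timeout")  # simulated: fails once, then succeeds
    state["order_status"] = "shipped"
    return "reply"


def reply(state: dict) -> str:
    state["reply"] = f"Your order is {state['order_status']}."
    return "done"


def handoff(state: dict) -> str:
    state["status"] = "handed_off"
    return "done"


STEPS = {"lookup_order": lookup_order, "reply": reply, "handoff": handoff}
result = run_flow(STEPS, "lookup_order", {})
print(result)
```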

At this stage, you’re not just building an assistant; you’re designing how it thinks.

Step 6: Give Your Agent a Personality (and a Voice)

You’ve built a smart, capable agent — but now it needs character. Personality isn’t just flair; it’s what makes your agent feel human, relatable, and brand-aligned.

Think about it: Would you rather talk to “Support Bot #57” or “Aria from Verloop — your 24/7 shopping guide”?

This is where persona design comes in. Set up:

Voice & Personality

- Tone: Friendly, witty, professional, empathetic — match your brand voice.

- Name & Backstory: Give your agent a name, a purpose, even a tone guide.

- Fallbacks: How should it handle “I don’t understand”? Use graceful, helpful responses.
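Keeping the persona in one config object makes it easier to stay consistent, because the same source renders both the system prompt and the fallback lines. The sketch below reuses the Aria example; the `Persona` fields, prompt wording, and fallback lines are illustrative assumptions.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Persona:
    name: str
    brand: str
    role: str
    tone: str
    fallbacks: tuple[str, ...]

    def system_prompt(self) -> str:
        return (f"You are {self.name} from {self.brand}, {self.role}. "
                f"Speak in a {self.tone} tone. Keep answers short and never invent policies.")

    def fallback_responses(self):
        # Rotate fallbacks so repeated misunderstandings don't sound robotic.
        return cycle(self.fallbacks)


aria = Persona(
    name="Aria", brand="Verloop", role="your 24/7 shopping guide",
    tone="friendly, empathetic",
    fallbacks=("Sorry, I didn't quite get that. Could you rephrase?",
               "I want to get this right. Could you share your order ID?",
               "Let me bring in a teammate who can help."),
)
print(aria.system_prompt())
```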

Multilingual Support

Want to scale globally? Let your agent understand and reply in local languages. Tools like Google Translate APIs or native multilingual LLMs (like Mistral) help.

Voice AI

For voice agents, conversation should feel like — well, a real conversation.

- Speech-to-Text (STT): Whisper, Deepgram

- Text-to-Speech (TTS): ElevenLabs, Google TTS

- Voice Activity Detection (VAD): For turn-taking without interruptions

Pro Tip: Use ONNX-optimised Silero with WebRTC-based VAD to keep latency under 300ms — crucial for smooth, natural-feeling calls.
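To show what VAD contributes to turn-taking, here is a basic energy-threshold detector with a short "hangover", so brief pauses mid-sentence don’t end the user’s turn. This is a simplified stand-in, not Silero or WebRTC VAD; the threshold, the 20 ms frame size, and the hangover length are assumptions you would tune.

```python
import math
from typing import Optional


def frame_rms(frame: list[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1, 1])."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def detect_end_of_turn(frames: list[list[float]], threshold: float = 0.02,
                       hangover_frames: int = 10) -> Optional[int]:
    """Return the index of the frame where the user's turn ends, or None.

    A turn ends once speech has been heard and then `hangover_frames`
    consecutive frames fall below the energy threshold
    (e.g. 10 x 20 ms frames = 200 ms of silence).
    """
    heard_speech = False
    silent_run = 0
    for i, frame in enumerate(frames):
        if frame_rms(frame) >= threshold:
            heard_speech, silent_run = True, 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= hangover_frames:
                return i
    return None
```

Model-based VADs such as Silero are far more robust to background noise than a plain energy threshold, which is why they are usually preferred in production.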

At this stage, you’re not just building an agent. You’re building a brand extension — one that customers will remember.

Step 7: Test Before Going Live

You wouldn’t launch a new product without QA — so don’t release your AI agent blind. Testing isn’t just about catching bugs; it’s how you ensure the agent delivers a consistent, on-brand experience across every interaction.

Start with the basics.

Unit-Test the Brains

Check all the logic — are API calls returning the right data? Is the CRM integration working? Are timeouts and retries in place?
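For the timeouts-and-retries question, one approach is to wrap each integration call in a small function with explicit retry behaviour, then test it against a fake client that fails on purpose. `FlakyCRM`, `fetch_with_retry`, and the backoff values below are hypothetical scaffolding, written with Python’s standard `unittest` module.

```python
import time
import unittest


class FlakyCRM:
    """Fake CRM client that times out `failures` times before answering."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def get_order(self, order_id: str) -> dict:
        self.calls += 1
        if self.calls <= self.failures:
            raise TimeoutError("CRM did not respond")
        return {"order_id": order_id, "status": "shipped"}


def fetch_with_retry(client, order_id: str, attempts: int = 3, base_delay: float = 0.01) -> dict:
    for attempt in range(attempts):
        try:
            return client.get_order(order_id)
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            time.sleep(base_delay * 2 ** attempt)  # exponential backoff


class FetchWithRetryTest(unittest.TestCase):
    def test_recovers_after_transient_timeouts(self):
        client = FlakyCRM(failures=2)
        self.assertEqual(fetch_with_retry(client, "A100")["status"], "shipped")
        self.assertEqual(client.calls, 3)

    def test_gives_up_after_max_attempts(self):
        client = FlakyCRM(failures=5)
        with self.assertRaises(TimeoutError):
            fetch_with_retry(client, "A100")
        self.assertEqual(client.calls, 3)


if __name__ == "__main__":
    unittest.main(argv=["retry-tests"], exit=False)
```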

Stress-Test the Smarts

Your prompts might work in theory, but what about under pressure? Use tools like LangSmith, PromptLayer, or TruLens to simulate:

- Edge-case inputs

- Hallucination-prone questions

- Multi-turn interactions

This helps measure how your LLM responds across scenarios — whether it’s crystal clear or gets stuck in loops.

Simulate Full Conversations

Now zoom out. Test end-to-end flows across paths:

- Happy paths: user completes the task smoothly

- Error paths: invalid inputs, timeouts, no data

- Interruptions: user changes topic, goes silent, or jumps steps
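These paths can be scripted as replayable test conversations. The harness below is a minimal sketch: `toy_agent` is a hypothetical stand-in for your real agent, and each scenario pairs a list of user turns with a phrase the final reply should contain.

```python
def toy_agent(history: list[str]) -> str:
    """Hypothetical agent: answers order questions, handles bad IDs and topic switches."""
    last = history[-1].lower()
    if "human" in last:
        return "Connecting you to a live agent."
    if last.startswith("order "):
        parts = last.split()
        order_id = parts[1] if len(parts) > 1 else ""
        return "Your order is shipped." if order_id.isdigit() else "That order ID looks invalid."
    if "where" in last and "order" in last:
        return "Sure, what's your order ID? Reply like 'order 123'."
    return "Sorry, I didn't get that. You can ask about an order or ask for a human."


SCENARIOS = {
    "happy_path": (["Where is my order?", "order 123"], "shipped"),
    "error_path": (["Where is my order?", "order abc"], "invalid"),
    "interruption": (["Where is my order?", "actually, cancel my account"], "didn't get that"),
    "escalation": (["This isn't working", "human please"], "live agent"),
}


def run_scenarios(agent, scenarios) -> dict[str, bool]:
    results = {}
    for name, (turns, expected) in scenarios.items():
        history: list[str] = []
        reply = ""
        for turn in turns:
            history.append(turn)      # user turn
            reply = agent(history)
            history.append(reply)     # agent turn
        results[name] = expected.lower() in reply.lower()
    return results


print(run_scenarios(toy_agent, SCENARIOS))
```

With a real LLM-backed agent, exact substring checks get brittle; teams often move to an LLM-as-judge or one of the evaluation tools mentioned earlier, but the scenario structure stays the same.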

Track What Matters

Once your agent is live, keep watching:

- First response time

- Tool success/failure rates

- Drop-offs or friction points

- CSAT from live users
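Most of these numbers can be computed from conversation event logs. The event schema below (`conversation_id`, `type`, `ts` in seconds, `ok`, `score`) and its values are a hypothetical example, so adapt the field names to whatever your platform exports.

```python
from statistics import mean

EVENTS = [
    {"conversation_id": "c1", "type": "user_message", "ts": 0.0},
    {"conversation_id": "c1", "type": "bot_message", "ts": 1.5},
    {"conversation_id": "c1", "type": "tool_call", "ts": 1.7, "ok": True},
    {"conversation_id": "c1", "type": "csat", "ts": 60.0, "score": 5},
    {"conversation_id": "c2", "type": "user_message", "ts": 0.0},
    {"conversation_id": "c2", "type": "bot_message", "ts": 2.5},
    {"conversation_id": "c2", "type": "tool_call", "ts": 3.0, "ok": False},
    {"conversation_id": "c2", "type": "csat", "ts": 90.0, "score": 3},
]


def first_response_times(events) -> dict[str, float]:
    """Seconds from each conversation's first user message to the first bot reply."""
    first_user, first_bot = {}, {}
    for e in sorted(events, key=lambda e: e["ts"]):
        cid = e["conversation_id"]
        if e["type"] == "user_message":
            first_user.setdefault(cid, e["ts"])
        elif e["type"] == "bot_message" and cid in first_user:
            first_bot.setdefault(cid, e["ts"])
    return {cid: first_bot[cid] - first_user[cid] for cid in first_bot}


def summarise(events) -> dict[str, float]:
    frt = list(first_response_times(events).values())
    tools = [e["ok"] for e in events if e["type"] == "tool_call"]
    csat = [e["score"] for e in events if e["type"] == "csat"]
    return {
        "avg_first_response_s": mean(frt) if frt else 0.0,
        "tool_success_rate": sum(tools) / len(tools) if tools else 0.0,
        "avg_csat": mean(csat) if csat else 0.0,
    }


print(summarise(EVENTS))
```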

Platforms like Verloop.io make this easier with:

- A single dashboard to track all chat + voice conversations

- Agent activity and performance reports

- Sparks: AI-powered audits that flag quality gaps automatically

Building the agent is just the start. Testing and tuning? That’s where it earns its ROI.

Testing Checklist for AI Agents

Before hitting publish, run through this checklist to ensure your AI agent is truly production-ready: not just functional, but frictionless.

Cognitive Accuracy

- Does the agent understand varied phrasing and intent?

- Can it handle edge cases without hallucinating?

- Are the LLM responses concise, relevant, and brand-safe?

Integration Health

- All external APIs (CRM, payment, search, etc.) working as expected?

- Timeouts, retries, and error messages handled gracefully?

- Is fallback to human escalation tested?

Conversational Flow

- Do all key flows (FAQ, lead capture, troubleshooting, etc.) work end-to-end?

- Are conversation interruptions, topic switches, or looping scenarios resolved cleanly?

- Can users exit, restart, or ask for help at any point?

Multichannel & Multilingual

- Does the agent behave consistently across web, app, WhatsApp, voice, etc.?

- Are translations accurate and tone-appropriate?

Latency & Real-Time Behaviour

- For voice: Is VAD working to avoid awkward silences or interruptions?

- Is response time acceptable (<300ms for voice, <2s for chat)?
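One way to check these budgets automatically is to time your response handler and compare it with a per-channel limit. The limits below mirror the checklist; `within_budget` and the two handlers are hypothetical, and taking the worst of a few runs is a conservative choice (in production you would track percentiles such as p95).

```python
import time
from typing import Callable

# Budgets from the checklist: <300ms for voice, <2s for chat.
BUDGET_S = {"voice": 0.3, "chat": 2.0}


def within_budget(handle: Callable[[str], str], message: str, channel: str,
                  runs: int = 5) -> tuple[bool, float]:
    """Time `runs` calls and compare the slowest one against the channel budget."""
    worst = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        handle(message)
        worst = max(worst, time.perf_counter() - start)
    return worst < BUDGET_S[channel], worst


def fast_handler(msg: str) -> str:
    return "ok"


def slow_handler(msg: str) -> str:
    time.sleep(0.35)  # simulates a slow model or API call
    return "ok"


print(within_budget(fast_handler, "hi", "voice"))
```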

Metrics & Monitoring

- Are analytics tools in place (Langfuse, Spark, PromptLayer)?

- Can you track drop-offs, agent usage, CSAT, and NPS?

- Are error logs and alerts integrated with your observability stack?

Running this checklist won’t just help you avoid issues — it’ll ensure your agent earns trust from Day 1.

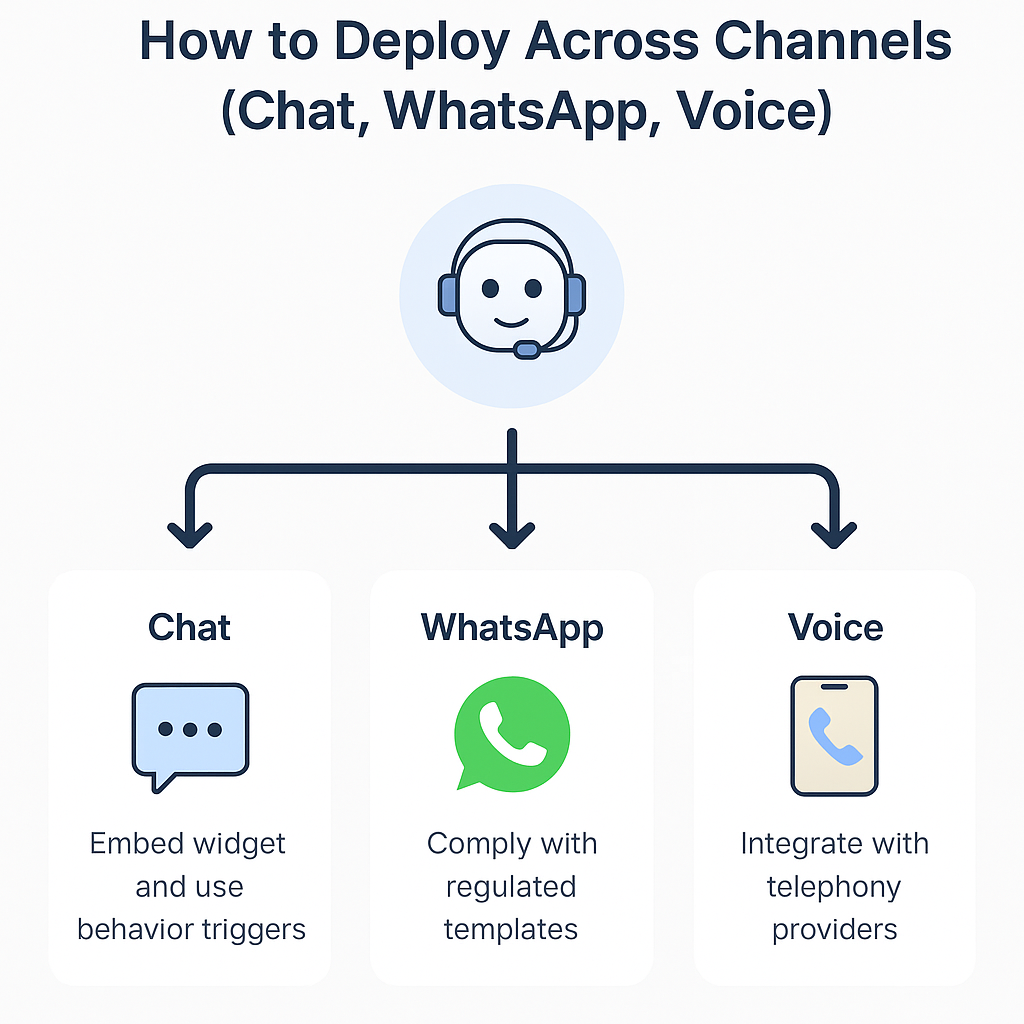

Deploying Your AI Agent Across Channels

You’ve built the AI agent. It understands context, responds in natural language, and even remembers past conversations. Now comes the real test: can it show up wherever your customers are?

To truly deliver on that promise, your AI agent must not only adapt to each channel’s quirks but create a unified experience across all of them.

Let’s walk through how to make that happen, one channel at a time.

Chat

Whether it’s your homepage, product page, or app support screen — chat is where most customer journeys begin.

But it’s not just about placing a bot in the corner of your screen. It’s about timing, context, and continuity.

What to do:

- Embed with a widget builder: Platforms like Verloop.io allow you to customise how, when, and where your agent shows up.

- Trigger conversations based on behaviour: Is the customer idling on a pricing page? Abandoning a cart? Set your agent to engage at those key moments.

- Keep agents and bots in one thread: A conversation that starts with the bot shouldn’t restart when it reaches a human. Use a shared dashboard with full transcript visibility.
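Behaviour-based triggers usually reduce to a few rules evaluated against page events. Here is a minimal sketch; `PageState`, its fields, and the 30-second and 2-minute thresholds are hypothetical, and widget builders typically expose rules like these through their own settings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageState:
    path: str
    idle_seconds: float
    cart_items: int
    seconds_since_cart_activity: float


def proactive_message(state: PageState) -> Optional[str]:
    """Return an opening message if a trigger rule fires, else None."""
    # Rule 1: visitor lingering on the pricing page.
    if state.path.startswith("/pricing") and state.idle_seconds >= 30:
        return "Comparing plans? I can help you pick the right one."
    # Rule 2: items in the cart but no cart activity for a while.
    if state.cart_items > 0 and state.seconds_since_cart_activity >= 120:
        return "Still thinking it over? I can answer questions about your cart."
    return None
```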

WhatsApp

With over 2 billion active users, WhatsApp isn’t just a messaging app — it’s a business lifeline, especially in APAC, LATAM, and EMEA.

But unlike web chat, WhatsApp comes with strict rules around opt-ins, templates, and time windows. It’s easy to get blocked. So, compliance isn’t optional — it’s your strategy.

How to make it work:

- Use an official BSP like Verloop.io: This gives you verified sender access, better delivery rates, and full access to template and campaign tools.

- Design flows for WhatsApp-first behaviour: Think FAQs, transactional updates, order tracking, and feedback collection — not just “Hi, how can I help?”

- Respect opt-ins and privacy: Always start with clear consent, and make it easy for users to opt out too.
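One WhatsApp rule worth encoding directly: free-form replies are allowed only within the 24-hour customer service window that opens when the user last messaged you. Outside that window you need a pre-approved message template, and business-initiated messages require opt-in. The helper below is a simplified sketch of that decision; the function name and return values are hypothetical, and your BSP’s API does the actual sending.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

SERVICE_WINDOW = timedelta(hours=24)


def choose_message_type(opted_in: bool, last_user_message_at: Optional[datetime],
                        now: Optional[datetime] = None) -> str:
    """Return 'free_form', 'template', or 'do_not_send'."""
    now = now or datetime.now(timezone.utc)
    if last_user_message_at is not None and now - last_user_message_at < SERVICE_WINDOW:
        return "free_form"    # user messaged recently: normal replies allowed
    if opted_in:
        return "template"     # outside the window: pre-approved template only
    return "do_not_send"      # no open window and no opt-in: don't message
```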

Voice

Voice feels natural to humans but is challenging for machines. Timing, accents, background noise, interruptions — they all make voice automation harder than chat.

But with the right tech stack, your AI agent can become a voice support hero.

Here’s what helps:

- Integrate with your telephony provider: Whether it’s Twilio, Exotel, or your own SIP line — your voice AI needs a stable line to work from.

- Use VAD for real-time turn-taking: Voice Activity Detection ensures your agent doesn’t interrupt or stay awkwardly silent. It speaks when you stop and listens when you talk — just like a human.

- Escalate with context: If a customer says “Speak to a human,” transfer the call — but carry over the chat history, customer ID, and interaction intent.
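"Escalate with context" can be as simple as bundling everything the human agent needs into one payload attached to the transfer. The `Handoff` fields below are hypothetical; map them to whatever your telephony or helpdesk integration accepts.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class Handoff:
    customer_id: str
    channel: str
    intent: str
    reason: str
    transcript: list[dict] = field(default_factory=list)
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def summary(self) -> str:
        # One-line brief for the human agent: intent, reason, and the user's last words.
        last_user = next((t["text"] for t in reversed(self.transcript) if t["role"] == "user"), "")
        return f"{self.intent} | {self.reason} | last said: {last_user!r}"

    def to_json(self) -> str:
        return json.dumps(asdict(self))


handoff = Handoff(
    customer_id="cust_789", channel="voice", intent="reschedule_delivery",
    reason="user asked for a human",
    transcript=[{"role": "user", "text": "I need to move my delivery"},
                {"role": "bot", "text": "Sure, which day works?"},
                {"role": "user", "text": "Speak to a human"}],
)
print(handoff.summary())
```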

One Agent, Consistent Experience

What matters most isn’t just being present on every channel — it’s showing up with consistency.

- Same persona and tone

- Unified knowledge base

- Central reporting dashboard

- Shared memory between chat, voice, and WhatsApp

When your agent feels like the same helpful presence across touchpoints, that’s when automation starts feeling personal, and customers feel understood, not processed.

Building Agents That Actually Work

Creating an AI agent isn’t just about plugging in a chatbot and calling it a day. It’s about designing an experience — one that’s intelligent, human-like, and capable of solving real problems at scale.

From defining your use case and designing a strong persona, to training on contextual data and deploying across multiple channels — each step matters. And with the rise of foundation models and multimodal LLMs, AI agents are no longer limited to answering basic queries. They’re evolving into reliable co-pilots for both customers and support teams.

Whether you’re starting with chat, going live on WhatsApp, or launching a voice bot, the key is testing, iteration, and alignment with your support goals. Build once. Improve always.

FAQs on Building AI Agents

1. What’s the difference between a chatbot and an AI agent?

A chatbot typically follows scripted rules or decision trees. An AI agent, especially one powered by LLMs, understands context, learns from interactions, and can carry out multi-step tasks with autonomy.

2. Do I need to know how to code to build an AI agent?

Not necessarily. Many platforms like Verloop.io offer no-code or low-code tools. However, technical know-how can help if you’re integrating APIs or custom workflows.

3. How long does it take to build an AI agent?

It depends on complexity. A basic bot can go live in a day. A full-fledged AI agent with integrations and multichannel deployment may take 2–4 weeks, including testing.

4. What data should I train my AI agent on?

Start with historical chat transcripts, help centre content, and FAQs. The more domain-specific and contextual the data, the better the agent performs.

5. Can the same AI agent work across voice, chat, and WhatsApp?

Yes, with the right platform. Omnichannel deployment ensures your agent maintains one brain and a consistent experience across channels.

6. How do I know if my AI agent is successful?

Track metrics like first response time, containment rate, CSAT, and fallback rate. Regularly review conversations using quality audit tools like Verloop.io’s Spark.