4 BIG Challenges Prevalent in Speech Recognition in AI Agent Voice

- August 22nd, 2025 / 5 min read

Aarti Nair

Speech recognition is difficult to implement in real-time environments. Below, we list the problems that must be tackled in speech-to-text conversion, along with solutions for them.

I’m sorry, can you repeat that?

Could you repeat what you just said? I didn’t understand.

Sorry, I didn’t quite catch what you said. Can you say that again?

You have probably heard your voice assistant ask you that, or some variation of it, many times. Or worse, it simply goes quiet on you.

Voice recognition technology has come a long way since the concept emerged in the 1950s. Yet one problem has persisted for users throughout: accuracy.

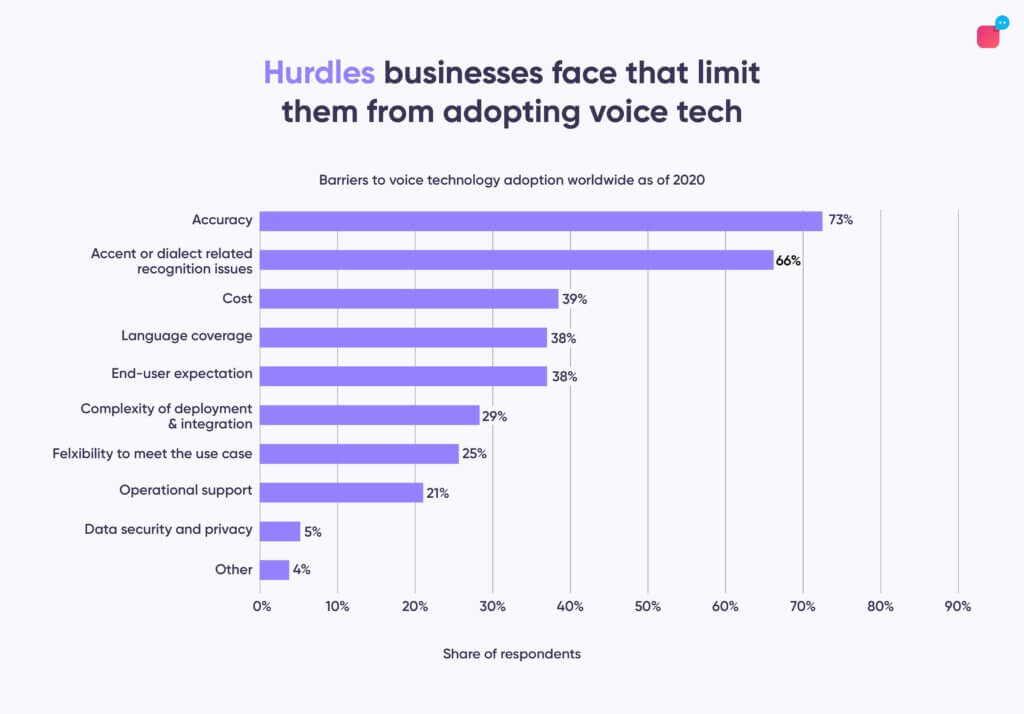

No wonder 73% of businesses believe lack of accuracy is the key reason they don’t use voice technology. This is why building AI algorithms that process voice input accurately has been a consistent focus of speech recognition R&D.

It’s easy to see why. Despite the barriers to adoption, voice AI is highly popular among digital consumers today. As many as 65% of users aged 25 to 49 speak to their voice-enabled devices every day.

Think of speech as the fastest way to cut through the clutter and reach the right spot in the shortest possible time. This is what most users today want and expect: to arrive at the right place at the right time. Indeed, automatic speech recognition (ASR) holds the key to great experiences that brands may be failing to deliver to users in the post-pandemic world.

What is Speech Recognition and How Does It Work in AI Agents?

Speech recognition is the technology that enables machines to understand and process human speech. In simple terms, it converts spoken language into text or structured data that computers can act on. This capability is the backbone of many modern AI agent voice solutions, from virtual assistants like Siri or Alexa to enterprise-grade Voice AI Agents used in customer support.

So, how does it work?

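When a person speaks, the AI agent captures the audio signal, converts it into acoustic features (compact descriptions of short slices of sound), and applies acoustic models and language models to recognise words and phrases. The acoustic model estimates which sounds, and therefore which words, best match the audio, while the language model estimates which word sequences are likely in the first place. Modern platforms often add Large Language Models (LLMs) on top of this, which help the agent interpret context, reduce errors, and generate more natural responses.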
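To make the split between acoustic and language models concrete, here is a minimal sketch of how a decoder might rank competing transcriptions by adding the two scores in log space, roughly `log P(audio | W) + lm_weight * log P(W)` for a word sequence `W`. The hypotheses, their acoustic scores, the tiny bigram table, and `lm_weight` are all hypothetical values chosen for illustration; real systems learn these from large amounts of data.

```python
import math

# Hypothetical bigram probabilities P(word | previous word); "<s>" marks the start.
BIGRAMS = {
    ("<s>", "buy"): 0.2,
    ("buy", "vegetables"): 0.05,
    ("buy", "veggie"): 0.001,
    ("veggie", "table"): 0.01,
}
UNSEEN = 1e-6  # hypothetical floor probability for bigrams missing from the table


def lm_log_prob(words):
    """Log-probability of a word sequence under the toy bigram language model."""
    total = 0.0
    prev = "<s>"
    for word in words:
        total += math.log(BIGRAMS.get((prev, word), UNSEEN))
        prev = word
    return total


def rescore(hypotheses, lm_weight=1.0):
    """Sort (text, acoustic_log_prob) pairs best-first by acoustic + weighted LM score."""
    def combined(hyp):
        text, acoustic = hyp
        return acoustic + lm_weight * lm_log_prob(text.split())
    return sorted(hypotheses, key=combined, reverse=True)


# The acoustic model alone slightly prefers the wrong word split...
n_best = [("buy veggie table", -10.0), ("buy vegetables", -10.5)]
# ...but the language model's knowledge of likely word sequences overturns it.
print(rescore(n_best)[0][0])
```

Setting `lm_weight=0.0` makes the ranking purely acoustic, which picks "buy veggie table"; the weight controls how much the language model's prior is trusted relative to the audio evidence.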

For Voice AI Agents, speech recognition plays a critical role in enabling real-time, human-like conversations. Without accurate recognition, the AI cannot understand customer queries, provide relevant answers, or execute tasks.

Examples in action:

-

Call centres: AI agents transcribe customer queries instantly, route calls, and even resolve common issues without human intervention.

-

Healthcare: Doctors can use speech-enabled AI to capture notes, retrieve patient data, or provide voice-guided assistance.

-

Banking & BFSI: Customers can check balances, report fraud, or apply for loans through Voice AI Agents that understand and act on speech.

In short, speech recognition is not just a feature — it’s the foundation that determines how well an AI agent voice solution can deliver on accuracy, empathy, and efficiency.

Why is Speech Recognition Important for Voice AI Agents?

For any Voice AI Agent, speech recognition isn’t just a technical feature — it’s the foundation of how customers experience your brand. The way an AI agent listens, understands, and responds shapes whether a conversation feels natural and helpful, or robotic and frustrating.

1. Improving Customer Experience

When speech recognition works well, conversations flow seamlessly. Customers can speak in their own words, at their own pace, without having to repeat themselves or stick to rigid commands. This creates natural, human-like interactions that build trust and reduce friction.

2. Driving Business Efficiency

Accurate recognition allows AI agents to resolve queries instantly, from checking order status to booking appointments. This translates into shorter handling times, lower support costs, and less dependency on human agents for routine queries. Businesses benefit from scaling their support without scaling headcount.

3. Supporting Growth and Scalability

As companies expand, they face more calls, across more regions and languages. Robust speech recognition makes it possible for Voice AI Agents to serve thousands of customers simultaneously, ensuring no call goes unanswered while maintaining consistent service quality.

4. Accuracy and Brand Consistency in B2B

For B2B brands, precision and tone are non-negotiable. A misinterpreted request in finance or healthcare isn’t just inconvenient — it can be costly. Likewise, if your AI agent doesn’t “sound” consistent with your brand values, customers notice. Strong speech recognition paired with speech profiling ensures every response is both accurate and aligned with your brand voice.

In short, the importance of speech recognition in Voice AI for businesses lies in its dual role: making interactions smoother for customers while delivering measurable efficiency and cost savings for companies.

4 challenges you will face building your automatic speech recognition (ASR) system

We know the potential ASR holds, but building an algorithm that’s highly accurate and intuitive, while not impossible, is still a tough nut to crack. So, what are the top ASR challenges you may face when adopting the tech?

1. Lack of linguistic knowledge

One major reason speech recognition is difficult is insufficient training on the languages users actually speak.

Companies often seem to overlook the fact that English is not a universal language, so expecting users from different geographies to have the same level of proficiency is unrealistic. In fact, 38% of users are hesitant to adopt voice technology because of AI’s limited language coverage.

If you deploy your voice assistant in a new region, the ASR will likely perform poorly unless it is trained on that region’s languages. And even when it is trained for the language, the ASR still has to distinguish between different dialects and accents to interpret speech accurately.

For example, a user who needs groceries may say “Buy vege-table” to the voice assistant, pronouncing the word a bit differently from the widely accepted “veg-tible”, which is also the only pronunciation the AI is familiar with. A poorly trained bot may mishear this input as “buy a veggie table” and assume the speaker wants to buy a table. Highly inaccurate!

2. Peripheral background sounds

Another top speech recognition problem that needs a solution is noise, and it is everywhere! So it becomes the job of the ASR solution to catch the speech input accurately through unwanted sounds. An ASR should be able to pick up the speaker’s voice even from a distance, in a room filled with white noise and cross-talk. Echo also adds to the imprecision: sound waves reflected off surfaces in the room reach the microphone slightly after the direct sound and overlap with it, distorting the input the system has to process.
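A simple way to see why echo hurts recognition is to model a single reflection: the microphone receives the direct sound plus a delayed, quieter copy of it. The sketch below uses that one-reflection model (real rooms produce many overlapping reflections); `add_echo`, `delay_samples`, and `decay` are hypothetical names, and audio is represented as a plain list of samples.

```python
def add_echo(signal, delay_samples, decay):
    """Simulate one reflection: y[n] = x[n] + decay * x[n - delay_samples]."""
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    echoed = list(signal)
    for n in range(delay_samples, len(signal)):
        echoed[n] += decay * signal[n - delay_samples]
    return echoed


# A single click followed by silence picks up a quieter "ghost" two samples later.
print(add_echo([1.0, 0.0, 0.0, 0.0], delay_samples=2, decay=0.5))
```

With speech instead of a click, every sound overlaps a fainter copy of what came just before it, which is exactly the smearing an ASR model has to see through. The same function could also serve as a crude augmentation step, producing echoey copies of clean training audio.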

3. Low trust in how ASR handles data

What are the other challenges of speech recognition? Data privacy. While we are making progress in the field of AI, many users are still hesitant to use ASR bots for tasks that involve sensitive data and money. Data privacy matters deeply to users who want some level of control over, and transparency into, their information.

PwC says that one of the three main reasons users are wary of experimenting with voice tech is simply a lack of trust. While more than half of users use their voice assistant to buy online, these purchases tend to be small, low-value ones. Data concerns therefore remain a key challenge for businesses adopting speech recognition technology: because users don’t fully trust voice assistants, businesses must be prepared to face reluctance from their market.

4. Costs and deployment

Speech recognition is also difficult to implement in real-time environments because of the capital and infrastructure it requires.

Implementing an ASR system requires long-term vision. It’s a long game, not a change that happens overnight. Bearing this in mind, you need to be prepared for the time, resources, and capital involved in building, testing, and deploying the system. Even the design work takes extra effort: the lack of visual elements makes designing interactive voice user interfaces (VUIs) more complex than designing UIs for chatbots.

Another disadvantage of speech recognition is that training language models takes considerable time and expertise. Gathering enough language resources, or making effective use of the ones available, may not come cheap. All in all, building everything manually can put a heavy dent in your budget.

How can you overcome challenges and issues in adopting speech recognition technology?

Implementing an automatic speech recognition system today is a strong way to stand out from competitors that still rely on outdated methods.

With new possibilities come challenges, and turning the disadvantages of speech recognition into strengths takes work. For example, one way to help your ASR perform well is to train it on audio from non-ideal, noisy environments, and to reduce noise in the audio input before speech-to-text (STT) conversion.

Where user intent and localisation come into the picture, businesses need to focus on specific regions. Ideally, use an ASR model pre-trained on multiple intents and languages, with a focus on dialects and accents. Such a system can identify the language regardless of the speaker’s accent or place of origin, even where dialects differ.

That is what Voice by Verloop.io sets out to do. Its state-of-the-art ASR is custom-trained on thousands of hours of voice data and natively supports 20+ languages, across a wide range of dialects and accents, to deliver accuracy at an affordable cost. Even so, noise suppression and reduction remain a key challenge for voice technology adopters. And to make sure you don’t put user privacy at risk, researching the security protocols your ASR provider follows is a great start.
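One common way to expose a model to non-ideal conditions is to mix noise into clean training clips at a chosen signal-to-noise ratio (SNR), defined as `10 * log10(P_signal / P_noise)` decibels. Below is a minimal sketch of that mixing step, assuming audio as equal-length lists of float samples; `mix_at_snr` is a hypothetical helper, the 440 Hz tone stands in for speech, and the Gaussian samples stand in for real recordings of streets or call centres.

```python
import math
import random


def power(samples):
    """Mean power (average squared amplitude) of a signal."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10 * math.log10(power(signal) / power(noise))


def mix_at_snr(clean, noise, target_snr_db):
    """Scale `noise` so its SNR against `clean` equals target_snr_db, then add it.

    Returns (noisy_mixture, scaled_noise). Both inputs must have the same length.
    """
    if len(clean) != len(noise):
        raise ValueError("clean and noise must have the same length")
    # Solve P_clean / (scale**2 * P_noise) = 10 ** (target_snr_db / 10) for scale.
    scale = math.sqrt(power(clean) / (power(noise) * 10 ** (target_snr_db / 10)))
    scaled_noise = [scale * n for n in noise]
    noisy = [c + n for c, n in zip(clean, scaled_noise)]
    return noisy, scaled_noise


rate = 16_000  # one second of audio at 16 kHz
clean = [math.sin(2 * math.pi * 440 * t / rate) for t in range(rate)]
rng = random.Random(0)  # fixed seed so the example is reproducible
noise = [rng.gauss(0.0, 1.0) for _ in range(rate)]
noisy, scaled_noise = mix_at_snr(clean, noise, target_snr_db=5.0)
print(round(snr_db(clean, scaled_noise), 2))
```

In practice, one would typically draw the target SNR at random from a range (for example, a few dB up to clean speech) so the model sees a spread of conditions rather than a single noise level.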

Learn how Verloop.io improved its ASR accuracy with error correction techniques.

What Are the Best Practices to Overcome Speech Recognition Challenges?

While speech recognition challenges like accents, background noise, and latency are real, businesses can overcome them by choosing the right platforms and applying proven practices. Here are some of the most effective ways to improve Voice AI Agent performance:

1. Use Platforms Trained on Diverse Datasets

The broader the training data, the better the AI understands accents, dialects, and industry-specific jargon. For example, a retail customer in London may sound very different from one in Mumbai — yet both expect the AI agent to understand them instantly. By using models trained on global, multi-accent datasets, businesses reduce errors and make interactions smoother.

2. Employ Noise Suppression and Voice Activity Detection (VAD)

Customers don’t always call from quiet rooms. Many conversations happen in busy streets, airports, or noisy call centres. Noise suppression filters out background sounds, while Voice Activity Detection (VAD) helps the AI identify when a speaker starts and stops talking. This ensures responses are timely and accurate, even in challenging environments.
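Production systems typically use trained VAD models, but the core idea can be sketched with a simple energy threshold: split the audio into short frames, flag frames whose energy exceeds a threshold as speech, and tolerate brief quiet gaps so a pause between words doesn’t end the speaker’s turn. The frame length, threshold, and `hangover_frames` below are hypothetical values for illustration, not tuned settings.

```python
def frame_energies(samples, frame_len):
    """Mean squared amplitude of each non-overlapping frame (partial last frame dropped)."""
    return [
        sum(x * x for x in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def detect_speech(samples, frame_len=160, threshold=0.01, hangover_frames=3):
    """Return (start_frame, end_frame) speech segments, end exclusive.

    A segment stays open until `hangover_frames` consecutive quiet frames are seen,
    so short pauses inside an utterance do not split it in two.
    """
    energies = frame_energies(samples, frame_len)
    segments = []
    start = None
    quiet_run = 0
    for i, energy in enumerate(energies):
        if energy >= threshold:
            if start is None:
                start = i  # speech begins
            quiet_run = 0
        elif start is not None:
            quiet_run += 1
            if quiet_run >= hangover_frames:
                # Close the segment just after the last loud frame.
                segments.append((start, i - quiet_run + 1))
                start = None
                quiet_run = 0
    if start is not None:  # audio ended while speech was still active
        segments.append((start, len(energies) - quiet_run))
    return segments


# Toy signal with 4-sample frames: silence, speech, a short pause, more speech, silence.
silence = [0.0] * 4
loud = [0.5, -0.5] * 2
audio = silence * 2 + loud * 3 + silence + loud * 2 + silence * 5
print(detect_speech(audio, frame_len=4, threshold=0.01, hangover_frames=3))
```

With a hangover of three frames, the one-frame pause is treated as part of a single utterance (frames 2 to 7); with `hangover_frames=1` it would split into two segments. Choosing this value is a trade-off between cutting speakers off mid-sentence and responding slowly after they finish.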

3. Combine LLMs with Contextual Retrieval for Accuracy

Speech recognition isn’t just about words — it’s about meaning. Homophones and ambiguous phrases often confuse traditional systems. By combining Large Language Models (LLMs) with Retrieval-Augmented Generation (RAG), Voice AI Agents can interpret intent based on context. For instance, if a customer says “I need to book a charge”, the system can use context (banking vs. telecom) to interpret the request correctly.
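A full LLM-plus-RAG pipeline is too large to sketch here, but a much simpler relative of the same idea, often called contextual biasing, shows how context can tip the balance between near-identical transcriptions: words associated with the current conversation’s domain earn a small score bonus. The domain term lists, scores, and `bias_weight` below are hypothetical, and this keyword boost is a stand-in for illustration, not an LLM or a retrieval system.

```python
# Hypothetical domain vocabularies that a retrieval step might supply for a conversation.
DOMAIN_TERMS = {
    "banking": {"card", "account", "balance", "charge", "loan"},
    "ecommerce": {"cart", "order", "delivery", "refund", "checkout"},
}


def bias_score(text, base_score, domain, bias_weight=2.0):
    """Add `bias_weight` for each word in `text` found in the domain's term list."""
    terms = DOMAIN_TERMS.get(domain, set())
    matches = sum(1 for word in text.lower().split() if word in terms)
    return base_score + bias_weight * matches


def pick_transcript(n_best, domain, bias_weight=2.0):
    """Choose the hypothesis with the highest context-biased score.

    `n_best` is a list of (text, base_log_score) pairs from the recogniser.
    """
    best = max(n_best, key=lambda hyp: bias_score(hyp[0], hyp[1], domain, bias_weight))
    return best[0]


n_best = [
    ("i want to report a lost cart", -4.1),
    ("i want to report a lost card", -4.3),
]
print(pick_transcript(n_best, "banking"))    # banking context favours "card"
print(pick_transcript(n_best, "ecommerce"))  # e-commerce context favours "cart"
```

The audio alone barely separates "card" from "cart"; the conversation’s context is what decides. An LLM with retrieved context can make the same kind of judgement with far richer signals than a word list.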

4. Invest in Speech Profiling for Brand Consistency

Even with high accuracy, a robotic-sounding agent can damage customer trust. Speech profiling allows businesses to define tone, pitch, and pronunciation so the Voice AI Agent always sounds aligned with the brand. A healthcare provider may prefer a calm, empathetic tone, while an e-commerce brand may choose a friendly, upbeat voice. This consistency ensures customers recognise and trust your brand every time they interact with your AI.
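Speech profiling is platform-specific, but one widely supported, standards-based way to express tone settings for a text-to-speech engine is the SSML `<prosody>` element, which accepts `pitch`, `rate`, and `volume` attributes. The sketch below turns a hypothetical brand profile into SSML; the profile names and values are assumptions, and this is not a description of how any particular vendor, Verloop.io included, implements speech profiling.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical brand voice profiles expressed with named SSML prosody values.
PROFILES = {
    "healthcare_calm": {"rate": "slow", "pitch": "low", "volume": "soft"},
    "ecommerce_upbeat": {"rate": "fast", "pitch": "high", "volume": "medium"},
}


def to_ssml(text, profile_name):
    """Wrap `text` in a minimal SSML <speak>/<prosody> document for the named profile."""
    attrs = " ".join(
        f"{key}={quoteattr(value)}" for key, value in PROFILES[profile_name].items()
    )
    # escape() turns &, < and > into XML entities so the text cannot break the markup.
    return f"<speak><prosody {attrs}>{escape(text)}</prosody></speak>"


print(to_ssml("Your appointment is confirmed & we'll see you soon.", "healthcare_calm"))
```

Keeping the profile as data rather than hard-coding tags means every response passes through the same settings, which is the consistency the paragraph above describes. Some engines expect extra attributes such as `version` and `xmlns` on `<speak>`, so check the target engine’s SSML documentation.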

The best Voice AI solutions don’t just recognise speech — they understand, adapt, and respond in ways that are both accurate and brand-consistent. By following these best practices, businesses can transform speech recognition from a potential barrier into a competitive advantage.

Building the Future of Voice AI with Reliable Speech Recognition

Speech recognition is the backbone of every Voice AI Agent. It determines how accurately the AI can understand customers, how naturally it can respond, and how consistently it can represent your brand. But as we’ve seen, businesses must still navigate challenges like accents, background noise, contextual ambiguity, and latency before they can fully unlock the benefits.

That’s why choosing the right platform matters. The goal isn’t just to automate calls — it’s to deliver conversations that are accurate, human-like, and aligned with your brand voice.

At Verloop.io, our Voice AI Agents are designed with these principles at the core. From advanced speech profiling that ensures brand consistency, to low-latency responses that make conversations feel seamless, Verloop.io helps businesses overcome the common hurdles of speech recognition while scaling customer experience.

The future of speech recognition in AI Agents isn’t about replacing humans — it’s about combining intelligence, empathy, and consistency so every customer interaction builds trust. Brands that prioritise this today will be the ones customers remember tomorrow.

FAQs

1. What is speech recognition in Voice AI Agents?

Speech recognition is the process of converting spoken language into text so that an AI agent can understand and act on it. In Voice AI Agents, this technology enables real-time conversations with customers, allowing the AI to interpret requests, provide answers, or execute tasks without human intervention.

2. Why is speech recognition important for businesses?

Accurate speech recognition is essential for delivering smooth, human-like customer experiences. It helps reduce call handling times, automate repetitive tasks, and ensure that Voice AI Agents can scale support across industries like banking, e-commerce, and healthcare. Without reliable speech recognition, conversations can feel robotic and frustrating.

3. What are the biggest challenges in speech recognition today?

Some of the main challenges include:

-

Handling accents and dialects across global audiences.

-

Dealing with background noise in real-world environments.

-

Understanding ambiguous phrases and homophones.

-

Maintaining low latency for real-time conversations.

4. How can businesses test speech recognition accuracy?

Businesses can measure speech recognition performance by evaluating Word Error Rate (WER), latency, and contextual accuracy. Testing should include a variety of accents, noisy environments, and industry-specific terms. Platforms like Verloop.io optimise for these factors to ensure reliable, real-world performance.
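Word Error Rate is the minimum number of word substitutions, deletions, and insertions needed to turn the system’s transcript into a reference transcript, divided by the number of words in the reference. Below is a minimal sketch using a word-level edit-distance table; `word_error_rate` is a hypothetical helper, and real evaluations usually normalise punctuation and number formats as well, while this version only lowercases.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = fewest edits turning the first i reference words into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution_cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,  # deletion
                dist[i][j - 1] + 1,  # insertion
                dist[i - 1][j - 1] + substitution_cost,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# One substitution plus two insertions against a four-word reference.
print(word_error_rate("buy vegetables for dinner", "buy a veggie table for dinner"))
```

Because insertions count as errors, WER can exceed 1.0. Running the same function over test clips grouped by accent or noise condition is one simple way to see where a model struggles, rather than relying on a single averaged score.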

5. Can Voice AI Agents replace human agents completely?

No — and they shouldn’t. Voice AI Agents are best at handling routine queries quickly and accurately. Human agents remain essential for complex, escalated, or emotionally sensitive issues. The best customer experiences come from a balance of AI speed + human empathy.

6. How does Verloop.io help solve speech recognition challenges?

Verloop.io’s Voice AI Agents are built with speech profiling, low-latency response systems, and contextual intelligence to overcome common recognition issues. They ensure conversations are accurate, brand-consistent, and seamless — while providing smooth handoff to human agents when needed.